Column Level Lineage

Why do we want it? We want it now!

For about as long as data folks have talked about lineage in data, they have also wished and hoped for Column Level Lineage.

What is Callum Column Level Lineage (CLL)?

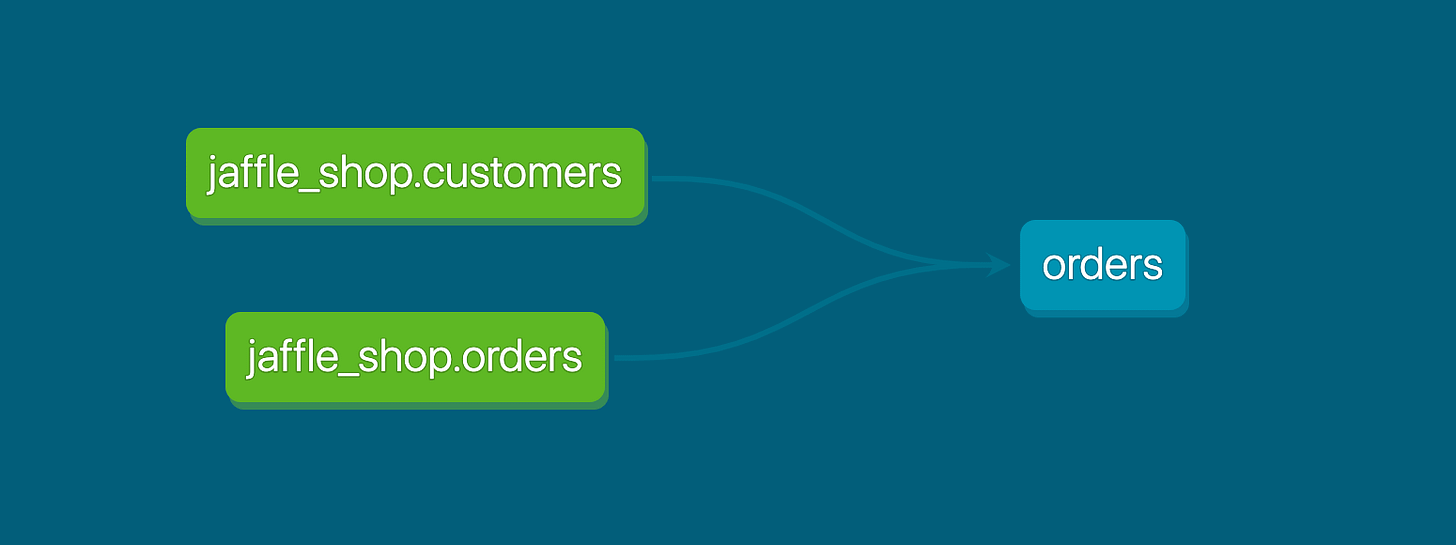

In data, lineage describes the provenance of where data came from. Let’s take this now famous dbt dag:

The orders table has two source tables upstream in its lineage. Even at table or entity level, lineage is very useful. Often when data transformation jobs fail, it is caused by a problem with one node in a DAG that has a cascade failure effect on everything downstream in its lineage. Knowing that a particular node was the problem massively reduces the ‘needle in haystack’ work that Data and Analytics Engineers have to do.

Column (or field) Level Lineage goes one step further down and looks at the fields or columns in the tables and how they flow from one table into another.

Even though column level lineage suggests that it is confined to within tables and therefore databases, if you include fields in semi-structured data, it can spread much wider into pretty much any system that generates data.

Why is it hard?

dbt’s ref() function has made table level lineage table-stakes now. Yet, dbt Labs have yet to commit to delivering CLL, even though they have had table level since inception. Why? Probably because it is much more complex than table level.

With table level lineage, you look at the create/insert/merge/update statement of one table and any table referenced is therefore upstream in its lineage.

CLL is more complex:

Aggregations of columns and fields can mean that the same fields in a select statement mean different things in lineage. eg

select order_date, order_idVSselect order_date, count(distinct order_id) as orders. In both cases, order_id is in the column level lineage but meaning different things.In which part of the query statement is the column used? (Some tools offer direct or indirect CLL as a choice to only allow select statement fields in lineage.)

A column can be used as a filter in a ‘where’ clause of a query and not show up in the ‘select’ statement… this column therefore won’t feed through the lineage although it has had an affect on the lineage of the next table. How do you treat this or describe this?

A column can be used in a ‘join’ and not in the ‘select’ statement - again, this won’t feed through the column level lineage, but it has impacted on the lineage of the next table.

New features of SQL, like ‘qualify’ statements, can use columns that don’t exist in the ‘select’ statement but again impact on the lineage of the next table.

Columns are frequently aliased and can have many different names throughout one lineage that persist in a way that aliasing tables doesn’t.

The use of CTEs and subqueries can mean that one query can have n layers of CLL.

Many databases have syntactic sugar to deal with things like parsing fields out of JSON, which adds to the complexity of generating column level lineage.

This is just some of the difficulty - others could probably describe many other edge cases.

Add to this complexity, that most databases have slightly different SQL syntax to each other…

Why do we want it?

Before I say my piece on this, I decided to play devil’s advocate and ask some folks in substack chat and data-folks.masto.host:

Mariah Rogers (I feel like there is blood, sweat and tears baked into this quote… she’s available for a new role, don’t miss out!)

@jayatid

I want my column documentation to be inheritable from SRC to MARTI want my analysts and business stakeholders to be able to look up a column on a report that they have questions about and know EXACTLY what source system/table it originated from, whether it got manipulated/overwritten somewhere along the way, how the metric was calculated, etc.

Babak Tourani

@jayatid Where does "this value" come from? Directly from a source (through proxies, potentially) or it has been manipulated and/or aggregated along the way? I totally understand that it's not easy to provide such formulated explanation systematically. The manual alternative (that could happen frequently in response to "this value doesn't look right" ) is messy, too.

Gabe Schenz

@jayatid I want a recommendation engine that will allow me to know if a query I'm writing is satisfied by some table already.

@jayatid I want a report of dataset and data process duplication so that I can start deleting things aggressively.

@jayatid I want to maximize the work not done for data teams who are already being pushed to their limits.

Erik Edelmann

@jayatid I’m with other folks here, there’s so much you could say, but even some of the simplest things that basic column level lineage could unlock would be massive, like just helping to know what to delete. Love deleting things. I believe @sarahkrasnik does too

Doga Kerestecioglu

@jayatid understand what my metric is telling me.

Jonathan Barone

If you have table level lineage + you dry run queries in CI then I think column level becomes a nice to have feature

Henning Holgersen

A little too frequently I hear “the value in this column is wrong” or something to that effect. Tracing back stuff like that is kind of the poster child for column-level lineage, and would have saved me time. The question is though, do I spend enough time doing it for the feature to make sense? Maybe not. Are the errors time-sensitive enough that I really need it to speed up the time to a fix? Maybe not.

I am mostly responsible for data ingest, so tracing back from a dim to check a source can be time consuming, and I don’t have enough domain knowledge to guess correctly where some particular column comes from.

Some common themes emerge:

Understanding inheritance/lineage of where a field came from

Understanding how a field has been manipulated/edited from its source fields at any given stage of lineage

Helping to reduce duplication of data created by understanding similarity. (I’ve often thought that having a dbt “similar” command would be really cool to tell you whether a model was similar to another based on lineage and grain)

Making the developer experience for data teams better

Understanding the true meaning of a metric

At Metaplane, when we’ve discussed the value of CLL, we’ve thought about these same principles in the context of dealing with data incidents and helping customers choose what to monitor:

What is the root cause of this data issue? How do we get to it faster?

Understanding CLL often allows you to trace back silent data issues one step further back immediately without another investigation step. eg Why did this column start to contain empty strings? It came from this event field where the Product Engineer changed the logic to occasionally send empty strings in the field, instead of not including it. CLL can potentially allow you to trace to a source system where table or entity level won’t; sometimes the input sources for the fields in a table or payload are actually different systems altogether

All impact analysis will be more accurate and targeted, reducing alert fatigue

Higher resolution lineage visualisation

Entity importance scoring will be more accurate, as we will know which fields are feeding the most used metrics/dashboards/rETL syncs

The reason I played devil’s advocate, is that I feel you can get 80% of the benefit of CLL just with good table level lineage and CI processes, as Jonathan described above.

Don’t get me wrong - I love the idea of CLL as much as the next data person, it’s just that, if I’m being ruthless… how much more would I actually pay to have it in a data tool? I’ve heard so many data leaders say they want it, but when the time comes to pay up, they baulk. I don’t believe it’s a standalone tool in its own right - it belongs integrated into another system, making that system better.

It would really be ideal for it to be part of dbt... given this is where most of the choices defining CLL are made. Would it be too onerous to have a column level ref() function? Perhaps one that abstracted the functions that apply treatment to input columns, to generate an output column?

{{column_ref.sum(relation.column)}}

{{column_ref.row_number(relation.column, partition_by:[relation.column, relation.column], order_by: [relation.column])}}

{{column_ref.case_when(inputs:[relation.column...], function: macro_name)}}

Where columns are used in joins and where clauses that could cause filtering:

{{column_ref.join_on(relation1.column, relation2.column)}}

{{column_ref.where(relation.column, operator=greater_than, value=’2022-11-01’)

This is actually similar to how some of the functions in dbt_utils work today:

{{ dbt_utils.unpivot( relation=ref('table_name'), cast_to='datatype', exclude=[<list of columns to exclude from unpivot>], remove=[<list of columns to remove>], field_name=<column name for field>, value_name=<column name for value> ) }}

It does result in introducing much more Jinja into our lives this way though 😶... models will be more Jinja than SQL for sure, on this basis. However, DWH migration would become very easy.

As I mentioned above, there is already an active thread on CLL on my subscriber chat, come join us! There is now an Android Substack app too.

On that note, I wish you a very happy Christmas and hopefully some time away from work 😉🎄💜.

Oh god that {{ column_ref }} thing looks terrifying lol. Hadn't heard the different perspectives around this, thanks for sharing :)

(Side note: If you're all building this, IIRC sqlparser-rs got us pretty far, albeit back in 2020 https://github.com/sqlparser-rs/sqlparser-rs)