Consolidation by other means

Could integrations and curated stacks make mass acquisitions unnecessary?

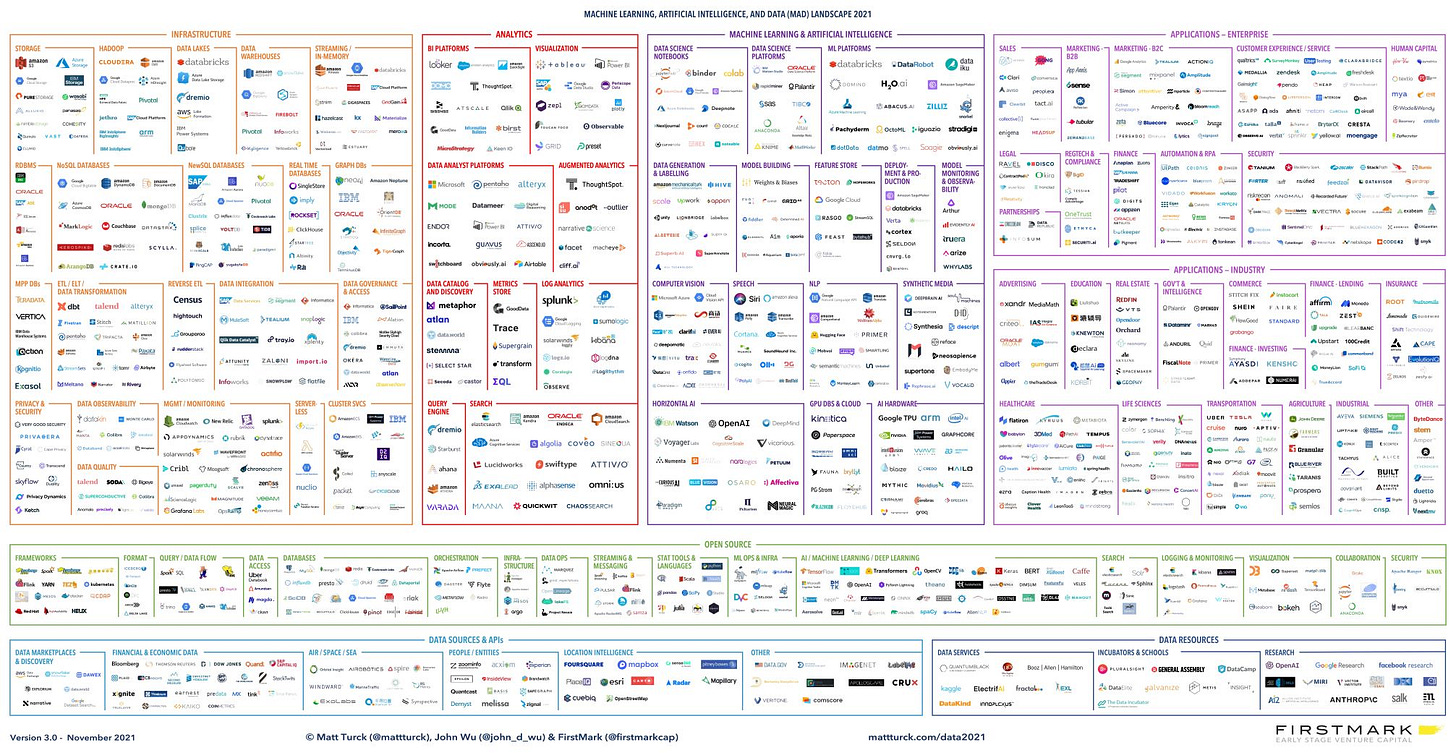

Many VCs and founders in the data space have predicted a great consolidation after the explosion of “Modern Data Stack” companies.

With the huge investment in the space in 2020 and 2021, there have been many new entrants to the market. Whilst some of these companies are possibly too narrow in scope to survive, some will pivot and expand their footprint, and others are solid companies aiming to be best of breed in their niche. Even so, plenty more companies won’t make it; such is the nature of early-stage companies. With the high valuations from frothy rounds coming back down to earth, and profitability way over the horizon, it will be especially difficult for them to raise money.

However, there are many truly innovative companies gearing up to weather the harsh times ahead. They have solid products and are pushing hard on go-to-market to prove their value… and yet it’s still not easy. Customers aren’t signing up in droves off the back of little to no sales motion - these companies are having to grind it out: Product Led Growth is turning into Product Led Sales in order to “anneal” the market. Famous founders “personally” email you repeatedly to shill their product.

Content marketing isn’t so effective when there is a vast quantity of it - it’s as if there isn’t any content at all… it’s just noise. Attention is finite: when the first few companies did content marketing, it worked and they won a big part of the mind share. Now that most of the companies above are doing it, there is just a sliver to be had - the medium to long term uptick in organic leads from your /blog content repository isn’t coming. There is still value to be gained from having content for SEO, but really you’re then writing content for a machine to read first and decide whether or not it’s important… no wonder this kind of content isn’t the most engaging.

Another Way

So, why aren’t customers looking to buy the full gamut of tools above? Sure, budgets are tighter now, and that’s as good a thing to blame as any, but perhaps what’s more problematic is actually the mind share. If you have to manage tens of vendors, with the QBRs, the renewals, the discount negotiations, the exec sign offs, the comparisons, the rollouts… where is the time to do anything else? Can you even do the work to manage all the vendors? Even with no rules it was still a lot of work.

However, I’m not convinced we want to be given a bundled stack from one vendor, or even from one cloud provider - if some enterprise architect wants me to eat off one menu for the rest of my days, I will vomit in his face and leave the restaurant.

I hope that what could be possible is something looser than a conglomerate, but with most of the benefits. Perhaps it’s a siphonophore - a colony of tools working together for a common purpose.

Why doesn’t it work exactly like this now? The problems I’ve described above regarding mind share arise because you have to have a relationship with each vendor in your stack, with separate billing on a different cycle, and so on. This is why enterprise architects like you to buy everything off a Seattle restaurant’s menu - it’s easier to manage and they can get a group discount, unified billing and RBAC/security.

What if we could get these benefits without being restricted to the usually mediocre fare that’s on the menu? What if we could wander around a curated indoor market of individual stalls, each specialised in doing one thing really well: one vendor making the very best bao buns, one making the best jerk chicken, another doing Argentinian chimichurri steak and so on? You would still pay one bill at the end, and all the vendors inside would have to meet the same standards.

Because the gravity of the Modern Data Stack is directed towards the data warehouse and dbt, data and metadata are, for the first time in the data profession's history, easily accessible by all tools in the stack.

ELT vendors, like Fivetran, take data from most sources you want, put it into the data warehouse and run dbt transformations on this data.

Consumption layer tools, such as Lightdash, Mode, Hex, Count, ThoughtSpot, Hightouch and Census, can all access the data warehouse, increasingly ingest dbt metadata and can be triggered by dbt Cloud webhooks.

Orchestrators, like Dagster, are able to orchestrate how the stack runs together, while observability tools, like Metaplane, watch for issues and alert. All of this runs on the same core infra - the data warehouse, dbt and how all of these tools integrate with both of them.
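As a rough illustration of the shape described above, here is a minimal sketch in plain Python of a stack where everything downstream hangs off the warehouse load and the dbt run. The stage names and the `run_stack` helper are hypothetical, and this is not how Dagster or any specific vendor implements orchestration; it only shows the dependency ordering an orchestrator would need to respect.

```python
from graphlib import TopologicalSorter

# Hypothetical stage names; each stage maps to the set of stages it depends on.
# Every consumer depends on the dbt run, which depends on the ELT load.
STACK = {
    "elt_load": set(),
    "dbt_transform": {"elt_load"},
    "bi_refresh": {"dbt_transform"},
    "reverse_etl_sync": {"dbt_transform"},
    "observability_checks": {"dbt_transform"},
}


def run_order(stack):
    """Return one valid order in which every stage follows its dependencies."""
    return list(TopologicalSorter(stack).static_order())


def run_stack(stack, runner):
    """Call runner(stage) for each stage in dependency order; return that order."""
    order = run_order(stack)
    for stage in order:
        runner(stage)
    return order


if __name__ == "__main__":
    # Stand-in runner: a real one would call each tool's own API or CLI.
    run_stack(STACK, lambda stage: print(f"running {stage}"))
```

The point of the sketch is that the graph is shallow: once the load and the transformation have run, every consumer can be kicked off independently, which is what makes a shared core of warehouse plus dbt so convenient to integrate against.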

The final difficulties are now around accessibility, billing and tool management. There is a new breed of aggregators that provide you with unified billing, a curated or well-integrated choice of stack and unified access control. Could this be the final piece of the puzzle that helps the Modern Data Stack cross the chasm?

These sorts of aggregators have been referred to as DataOps tooling or a Data OS before - whatever they’re called, they feel like a good adjacent investment space for investors who have already invested in MDS tooling that now needs to go to market. Could companies such as 5X be these pre-curated, pre-integrated markets for data tooling?

5X takes the approach of giving the buyer full visibility and choice for every piece of the stack, while still guaranteeing interoperability, integration, combined billing and unified access control.

Y42 takes the approach of mostly choosing the stack for you, but exposing what the tooling is and allowing a deep level of configurability. There are others who take the curation approach, without exposing what underlying tooling is used - this is somewhat concerning. Just as data folks want to understand the provenance of data, they want to understand the composition of their tech stack, too.

The joy of 5X and Y42’s approaches is that you know what tools are being used and can therefore understand their features and technical quality up front. If these choices are hidden, you can’t be sure that the tooling used is high quality - it’s a case of having to trust a single vendor like in the pre-Big Data era, where you would choose full MS/Oracle/Teradata stacks. I’m not going back to that.

However, if I were to start a new data function at a smaller org with simpler data needs, where it didn’t make sense to hire a data team… using something like 5X or Y42 would have its appeal. My time to value would be much shorter than if I set up the stack myself, it would be more feasible for someone else in the company to pick up if I left, and a lot of boilerplate code wouldn’t need to be written.

Now consider someone who is less technically able, who wouldn’t have been able to set up a data stack by themselves anyhow; possibly someone from the other side of the chasm. They may not even know at the outset what tooling they will need by the end of the project. They might have a rigid budget, where they can’t tack things on as needed at a later date. The appeal is greater still.

Thanks for introducing these products. As an MDS-savvy person, I see that they solve a real problem. I'm curious how hard it will be, and how they'll tackle the challenge of reaching companies without the expertise and convincing them of such tools' positive cost/value balance in the current business conditions.