There has been a lot of talk about the value of data.

From Tristan’s last post (slightly trimmed):

I’m going to say something that some of you may not want to hear: I think that there is too little scrutiny of the value of data teams today.

Careers are made out of producing value for the business. And that requires tough choices and hard conversations.

We’ve historically had very hand-wave-y conversations about the ROI of data teams. Certainly, we aren’t about to start attributing dollars of profit to individual feats of analytical heroics—that shouldn’t be the goal. But the honest truth is that no organization can do this perfectly.

Instead, every functional area of the business has certain accepted metrics and benchmarks that they use to evaluate efficiency / ROI. These are often framed as a percent of revenue (for cost centers) or a percent of new sales / bookings (for revenue-generating functions). Some are framed on a per-employee basis (for example, IT).

For now, I don’t want to try to make a coherent argument as to exactly how this could or should be done, but I do think we should (and will be forced to!) start doing more here.

I would propose a way of doing this, similar to how goals and assists work in sport. Often with data, you’re helping another team, usually a revenue centre (RC), to optimise.

One way to share in the wins is for the data team to shoulder some of the RC's targets. This reduces the RC’s targets, and the data team itself becomes an RC. The problem is that the data team needs to partner with the RC to win, yet under this arrangement it can become a competitor instead of a partner. RCs often have the final say and control the levers that drive profit:

- The CRM team has control over email and push campaigns
- The Marketing team has control over marketing spend
- The Sales team has control over new deal structures
- The Account management team has control over renegotiations
- The Product team has control over new product features built and released

Another way is to have an assist target for the data team related to the RC. If the data team improves the performance of the RC, the RC still “scores the goal” but the data team “gets the assist” towards its own assist target. This may seem like double-counting, but data teams often work in a matrix way inside organisations. So think of it more as the same target achieved from both horizontal and vertical perspectives.

My first data role had the title “Strategy and Trading Analyst” - this role existed to find efficiencies in our trading. It seems strange to me that data is divorced from value-creation. It’s always been about the benjamins!

Making Assists

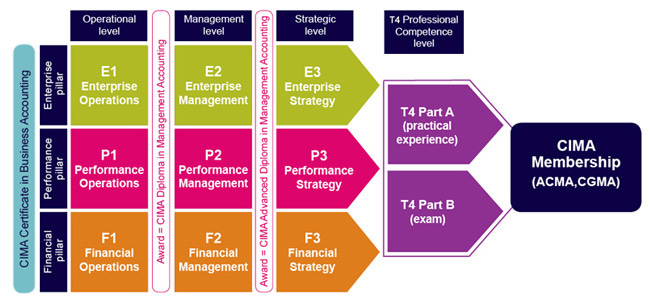

I’ve spent much of my time as a data practitioner as part of a Finance department. I actually qualified as an accountant during this time (shhh it’s a dirty little secret). I got qualified partly so Finance couldn’t say my data was wrong without me pulling apart their journals and ledgers to find their own issues! One thing most people don't know about accounting qualifications is that they are only one third accounting. The rest of the qualification is about business and performance management. This includes techniques such as variance analysis (a form of Root Cause Analysis), understanding how to calculate and interpret key performance metrics, and how to act and make recommendations based on these. In hindsight, it's a strong grounding for being a commercially impactful data person.

I qualified during my time in the payments industry, where I held a few different data roles. The first couple of these roles would now be considered Analytics engineering roles. In the first, I created data models to enable consolidated customer reporting in order to understand the full value of our customers. This allowed us to know which parts of our portfolio were the most valuable, in turn enabling our CXO to make better informed decisions about strategy, product development and pricing.

I was then part of a small team (me as Analytics engineer and one PM) that built a complex cost data model. I used MSSQL stored procedures to process the data in chunks to avoid our server running out of resources 😅. The model allowed us to understand this cost at a finer grain than ever before. This enabled us to then see portfolio profitability in a new light, triggering a large re-pricing activity to recoup cost from loss-making activity. The money saved (which is money made) was in the millions of dollars. While this was a painful message for some of our RCs, the impact of the project was well-understood throughout our company.

This project was a natural segue into my role doing Pricing Analytics. In this role, I led a team to build things like price regression curves to inform and build pricing policy. We then also built a system which forecast cost and profit, including Root Cause Analysis of why any forecast or budget was different to actuals. This analytical system was an early warning system against future losses, and helped our business save tens of millions of dollars by passing on margin impacts from cost changes to our customer base. It also had the ability to execute re-pricing campaigns on this basis. Call this reverse ETL/data activation, if you will. We also had incremental financial targets to deliver, which we had to lead on. Over the three years after the system was built, we had to deliver $600k, $1.5m and $3m of extra profit to the business.

We delivered those targets and more each year, but it was in partnership with our commercial teams. They “scored the goals” but we “got the assists”.
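To make the variance analysis idea concrete, here is a minimal sketch of a textbook volume/margin decomposition of the gap between budgeted and actual profit. It is not the system described above; the function name, parameters and figures are all hypothetical, and a real model would split the variance across many more drivers.

```python
def profit_variance(budget_volume, budget_margin, actual_volume, actual_margin):
    """Split the budget-to-actual profit gap into volume and margin effects.

    Hypothetical illustration: margins are profit per unit, volumes are units.
    """
    # Effect of selling more or fewer units, valued at the budgeted margin.
    volume_effect = (actual_volume - budget_volume) * budget_margin
    # Effect of earning a different margin per unit, on the units actually sold.
    margin_effect = (actual_margin - budget_margin) * actual_volume
    # The two effects add up exactly to the total profit variance.
    total = actual_volume * actual_margin - budget_volume * budget_margin
    return {"volume": volume_effect, "margin": margin_effect, "total": total}


# Made-up example: more units sold than budgeted, but at a thinner margin.
result = profit_variance(
    budget_volume=1000, budget_margin=2.0,
    actual_volume=1100, actual_margin=1.5,
)
print(result)
```

In this made-up case the extra volume adds profit while the margin squeeze removes more than it adds, which is the kind of signal that would prompt a re-pricing conversation with the commercial team.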

I then moved back into a more general data role in a FinTech lender. I built my first, and so far only, ML model here, to predict lead conversion; it was deployed, so I like to say I have a 100% ML model deployment record! We had an acquisition channel of lead-generating partners. They would provide us with a stream of leads and APIs to purchase the leads. We would be competing with other lenders to purchase the leads, which would typically cost around $100 each. Despite these leads being much higher intent than paid marketing or SEO, they had a conversion rate worth optimising due to this high cost.

I clearly remember my kids being ill on the weekend I managed to get a raw dataset to train with. My wife and I were sleeping in shifts to deal with the vomit, so I used my shifts to get the feature engineering and hyper-parameter tuning done! I finally had a stable XGBoost classifier with high enough AUC, and our engineering team deployed it into production.

As the number of leads was quite high, I could rearrange a weighted average conversion formula to approximate the increased conversion. With this increased conversion and a constrained budget for the channel, it's easy to calculate extra profit each month...
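As a rough illustration of that arithmetic (not the original calculation), suppose a share of purchased leads is selected with the model and the rest is not. The blended conversion rate is then a weighted average of the two groups, which can be rearranged to back out the rate for the model-selected leads. Every name and figure below is a hypothetical assumption; only the roughly $100 cost per lead comes from the text above.

```python
def model_conversion_rate(blended_rate, baseline_rate, model_share):
    """Back out the conversion rate of model-selected leads.

    Assumes: blended = model_share * model_rate + (1 - model_share) * baseline
    """
    if not 0 < model_share <= 1:
        raise ValueError("model_share must be in (0, 1]")
    return (blended_rate - (1 - model_share) * baseline_rate) / model_share


def extra_monthly_profit(budget, cost_per_lead, baseline_rate, model_rate,
                         profit_per_conversion):
    """Extra profit from the uplift when the monthly lead budget is fixed."""
    leads_bought = budget / cost_per_lead
    extra_conversions = leads_bought * (model_rate - baseline_rate)
    return extra_conversions * profit_per_conversion


# Hypothetical inputs: half the leads are model-selected, blended rate is 11%,
# baseline rate is 10%, and each conversion is worth $500 of profit.
rate = model_conversion_rate(blended_rate=0.11, baseline_rate=0.10, model_share=0.5)
profit = extra_monthly_profit(
    budget=100_000, cost_per_lead=100,
    baseline_rate=0.10, model_rate=rate, profit_per_conversion=500,
)
print(round(rate, 4), round(profit, 2))
```

Because the budget is constrained, the number of leads bought stays the same, so the extra profit comes entirely from the difference in conversion rate.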

The Lead Generation part of the marketing team achieved a higher conversion rate and was attributed a higher share of profit ("scored the goals"), but I got to "make the assist" with the model I built.

The projects mentioned above were the bigger ones in my career, but there have been many more smaller ones, always aiming to deliver those assists for the RCs I worked with. This was by whatever data means necessary:

- a shiny ML model
- actionable reporting
- business partnering
- analytical research to guide company strategy
- or even building a whole automated system

This is why I find it hard to understand why people say data doesn't drive money. This is my background in data... It's always been about the benjamins (or alans). The point of the Modern Data Stack, unbundling, rebundling, data app marketplaces… is to accelerate an organisation's ability to improve performance through data, and to offer near limitless scale.

The complex cost model above used to take me between hours and days to run on MSSQL server, which massively slowed down our iteration cycles. Had I had Snowflake or BigQuery, it would have taken minutes on simpler code that didn’t have to chunk. If I had dbt, I could have replaced the stored procedures with a collection of models. This would have saved time when one step failed, and would also have allowed the system to run in a multi-threaded way. We actually weren’t able to store the full granularity of the data the cost model produced, as we had such horrible storage constraints. We had to aggregate the data after processing and discard the full data.
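For readers who haven't had to work this way, the chunk-then-aggregate pattern looks roughly like the sketch below. It is a plain Python stand-in for illustration only, not the original MSSQL stored procedures; the function names and the `(portfolio, cost)` row shape are assumptions.

```python
from collections import defaultdict
from itertools import islice


def chunked(rows, size):
    """Yield lists of at most `size` rows so only one chunk is held at a time."""
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def aggregate_costs(rows, chunk_size=1000):
    """Sum cost per portfolio chunk by chunk, keeping only the aggregates."""
    totals = defaultdict(float)
    for chunk in chunked(rows, chunk_size):
        for portfolio, cost in chunk:
            totals[portfolio] += cost
        # The fine-grained rows in this chunk are dropped here; only the
        # running totals survive, mirroring the storage constraint above.
    return dict(totals)


# Hypothetical fine-grained cost rows: (portfolio, cost).
rows = [("a", 1.0), ("b", 2.0), ("a", 3.0)]
print(aggregate_costs(rows, chunk_size=2))
```

The trade-off is the one described above: memory stays bounded, but the full-grain detail is gone once each chunk has been aggregated.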

The same technical limitations had to be overcome with the forecasting and RCA system, which used the same stack. We had to use Excel as a front-end as nothing like Streamlit existed for making data apps. We had to build our own data activation as there was no Census or Hightouch to use. The Modern Data Stack would have enabled my team to deliver profit in weeks rather than months or years.

We can't be on the bench

I've been fortunate enough to come up through PE businesses that were profitable and focused on improving profits ahead of IPO, then subsequent data-rich tech environments. The space has usually been available for someone hungry to drive the business forward with data.

After moving into my role at Lyst, which has a self-serve data approach, I focused more on engineering than business impact. This was partly because the adoption of new tech and the pace of product change required Analytics engineering. The business was also expected to drive its own impact from the data provided to it. This does divorce data from value. Towards the end of my time there, I wanted to be more commercially impactful, which is necessary for me and is in part why I left.

Being commercially impactful is not a blocker to good data culture: it supports it. On the journey to achieving this data-driven commercial impact, data people will build some of the cultural norms Anna discusses in last week’s AE roundup:

- norms around data testing, quality, control, access
- shared language around describing the business
- values like accuracy, timeliness, reproducibility
- shared self-expression like jokes, memes and other cultural references

If your company "cares tremendously about the value you create", they will allow the budget and space to achieve this data culture. If the work you do is valuable, it’s in production, meaning you achieve bullets 1 and 3 because you need them. Bullet 2 is greatly helpful in aligning with the RCs you’re assisting, and is also easier to achieve as you are closer to them. Bullet 4 becomes shared among everyone who benefits from your work, far more than just data people.

Data work should drive measurable value; it should have a commercial target. It’s more fulfilling if it does. I know this from my own lack of fulfilment without a direct connection to value, having had one before. We need to help our organisations succeed to feel fulfilled and be valued as data practitioners.
