In this post, I will refer to Approach 1 and 2 metrics layers, as described in my previous post:

from metricalypse_soon import approach_1, approach_2

TLDR: Approach 1 metrics/semantic layers don’t allow the relational model to be defined in the layer: they expect single wide tables to be the foundation for any given metric. Approach 2 does allow the relational model to be defined in the layer, with the way tables are joined to be defined here - I believe this is a more powerful approach, allowing for more complexity to be captured and it also allows for single source of truth more readily.

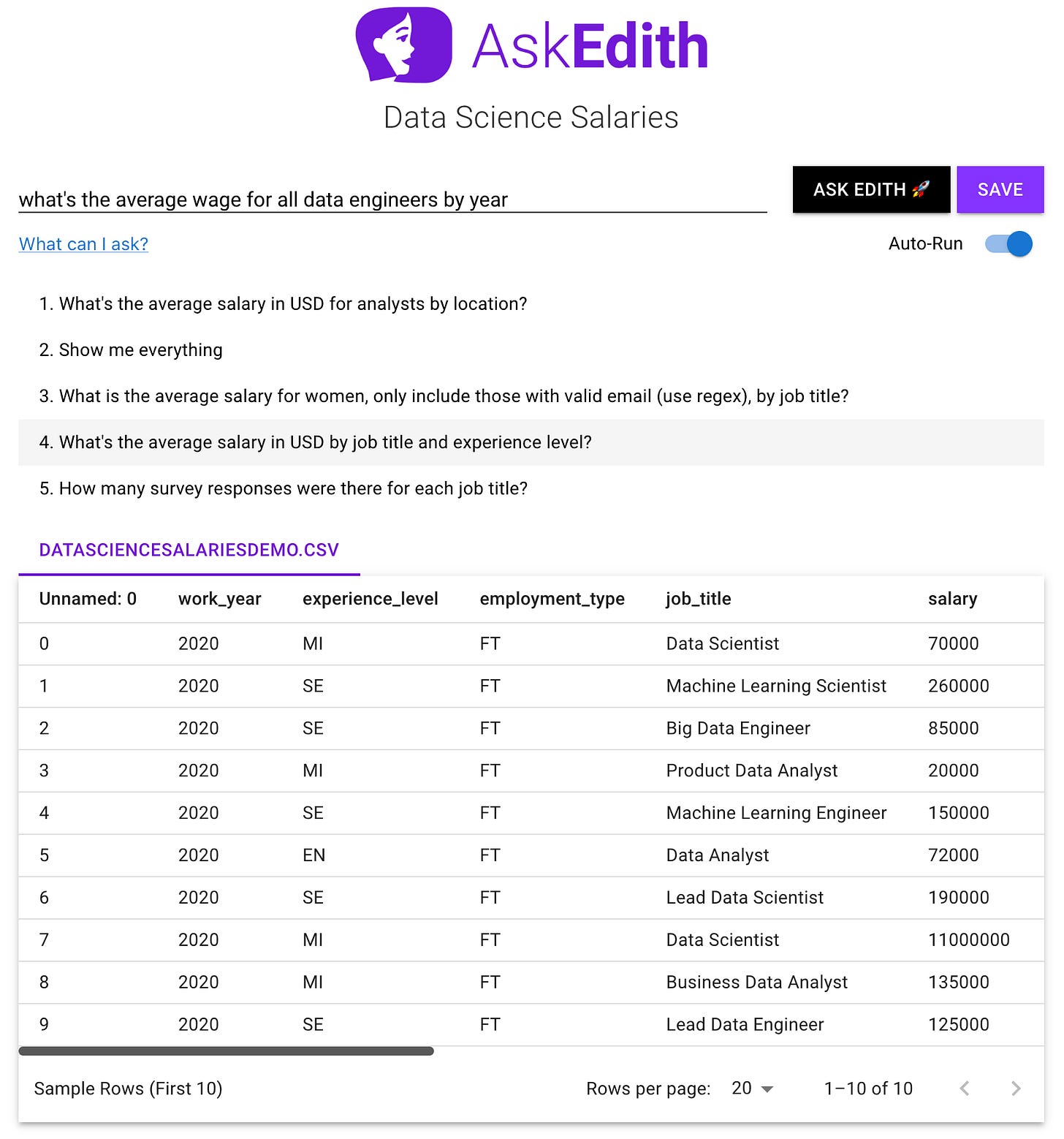

I recently spoke to Jared Zhao about AskEdith, which is the company he has founded. I gave the product a spin and I did a live onboarding, plus brief trial session with Jared watching on Zoom. I get asked to look at a fair few data products - what was particularly interesting about AskEdith to me, was the idea that I didn’t really need to define my metric or semantic layer to get answers. Is a well-made data model with no additional semantic definition on top sufficient to allow anyone to get answers from it?

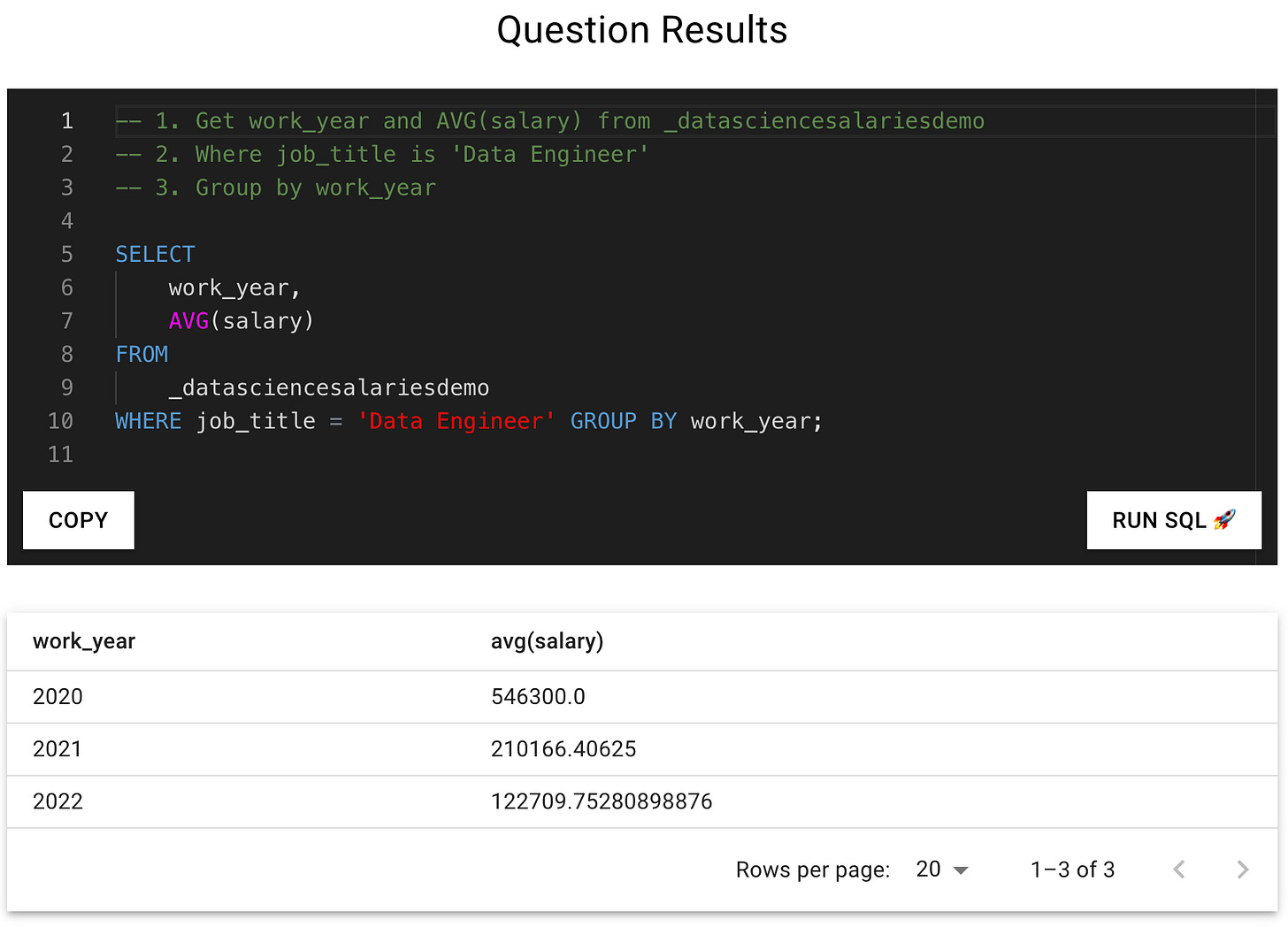

AskEdith uses GPT-3 to convert a text question asked by the user into a SQL query, to run on the dataset or model exposed to it. It doesn’t require a metric/semantic layer in order to function. As you can see above, I asked a question which requires an aggregation (average), filtering by job_title and grouping and sorting by year. How does AskEdith do with the question?

It actually does do pretty well - the one thing I would have wanted it do that it didn’t is to find all the data engineer-type roles. As you can see above, there are roles such as “Big Data Engineer” and “Lead Data Engineer” that I would have wanted to have been included in the results. I wanted the query to have had the filter:

WHERE job_title like ‘%Data Engineer’

This would have captured all or most of the roles I was targeting with my question (perhaps missing roles like “Data Platform Engineer”). However, you could argue that this is a problem with the data model, as there isn’t a good taxonomy of roles which group all of the similar roles together. It’s possible that the algorithm, seeing that a lower cardinality column with fewer roles and many that have exactly the term searched for, is a better choice.

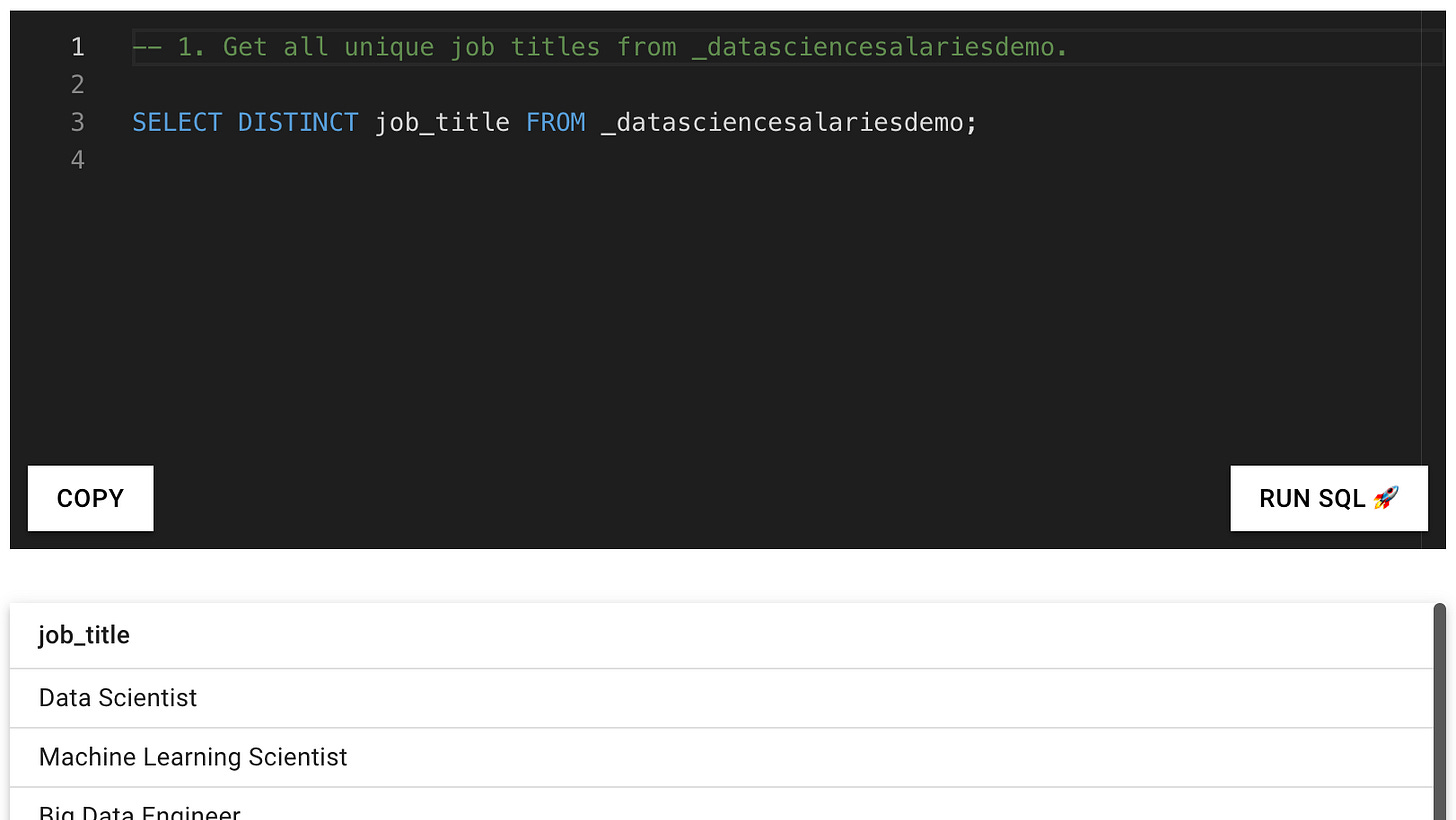

Let’s take all the job titles and make this grouping as an additional csv file - AskEdith correctly runs a select distinct on job title when I ask: “Show me all the job titles”. This is beginning to feel like Midjourney with data!

I’ve exported these with the original dataset and now coarsely grouped all the roles into Data Scientist, Data Engineer or Data Analyst… please don’t read anything into this - it’s just an example!

Now let’s see if AskEdith figures out that it should join to job_title_grouping and take the job_grouping instead of just the job_title, by asking the same initial question in this project:

As you can see, AskEdith does well with answering questions on a simple data model. These are the kind of questions that many stakeholders ask in tools like Looker and Lightdash, by dragging fields around the interface, or in Thoughtspot, Veezoo and Metabase, by asking natural language questions that these tools then guide to fields and entities mapped in their metrics/semantic layers.

I did also try using AskEdith on a much more complex relational model (Fivetran Hubspot data) and it struggled where there were many tables which had similar keys. It would revert to using just one table for a query, where it should have joined another to find additional details. It still performed really well on one table, however.

Approach 1 semantic layers are effectively mappings of metrics to tables, where one metric can only come from one table. AskEdith essentially makes them redundant. Why bother taking the time to maintain them, when an automated tool negates the need? You’d be better off making sure you had a very clean data model with good taxonomies and normalisation. Your stakeholders could then pretty easily use a tool like AskEdith to find out what they wanted. Bear in mind that AskEdith is one of the first of these kinds of tool based on GPT-3, and it’s far from being near a final product. GPT-3 either has or will be superseded many times in the near future - this type of tool will go from strength to strength, whereas BI tools relying on Approach 1 semantic layers are about as good as they are going to get.

AskEdith struggles with unclean relational models, and even some decently-structured large ones, due to ambiguity in the data model. Approach 2 semantic layers are more complex and still provide value - you can, but perhaps not should, overcome some of the ambiguity with the data model in the semantic layer. Perhaps in time, with enhanced training and stricter modelling, a tool like AskEdith could do well enough with more complex data models to not need a semantic layer, but we’re not there yet and I don’t think we will be for a while.

Since I wrote this post Jared followed up with me to talk to me about a new feature: “account-specific AI models” which could solve the issues I had with the more complex model. I’ll try this out in the coming weeks and post about what I find.

Awesome article David! It’s great to see how AskEdith performs on real-life data.

Like you, I like “Approach 2” (both in the context of natural language and in general):

1. It’s actually harder for data/analytics engineers to do Approach 1 than Approach 2. Building “one big table”s with all the right joins and granularity is tricky. There are so many ways of slicing and dicing data, so it’s nice to let (for example) LookML generate joins for you.

2. For at least the next few years, Natural Language won’t be the main way we interact with data. It’ll exist alongside “traditional” BI tools. And those tools all have their own definitions of metrics and dimensions (basically, a semantic layer even if it’s not an open one like Transform or dbt’s). You really want them to match.

(Disclaimer: not exactly an unbiased perspective, lol. I’m building Delphi[0] which helps data teams who struggle with self-service by adding Natural Language capabilities to the modern data stack. And it very much takes Approach 2.)

[0] https://delphihq.com