Before

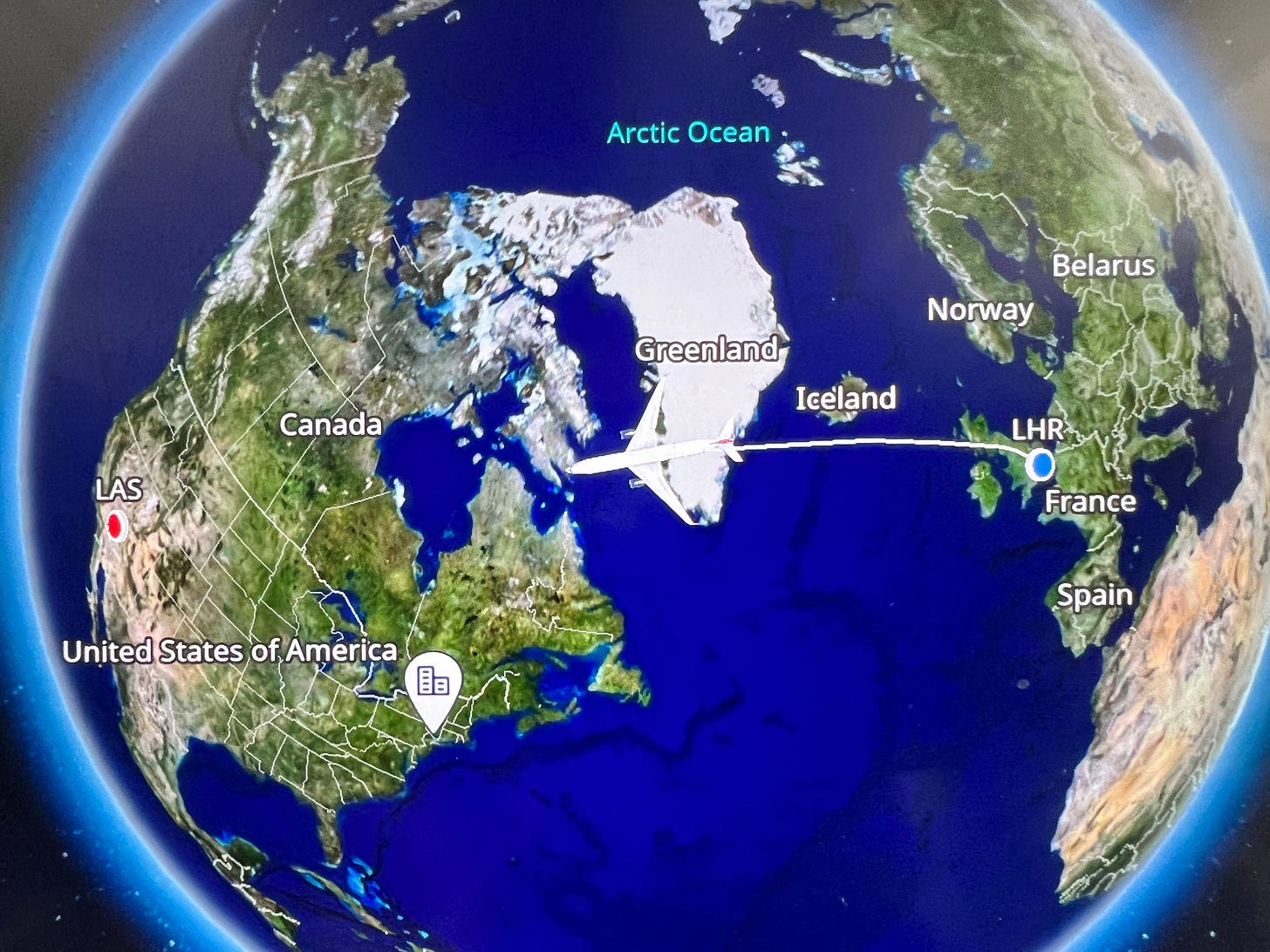

As many of you will know, there is a big thing in data going on in Las Vegas this week - Snowflake Summit 2022. It's the first large in-person data conference I've attended since Snowflake Summit 2019 in London. It's also the first time I've flown in 7 years! Between having small children and the impact of Covid restrictions, I have happily avoided airports and planes.

The global backdrop is challenging: the war in Ukraine, VC caution and weak public market performance. It's a relief to be meeting so many great people in our industry, with the focus on future innovation in data. There are many people in our industry whom I've spoken to for years but never met in person, or even spoken to synchronously. I'm looking forward to seeing as many of you as I can!

Benn recently posted about the opportunity for a company to build a "complete data stack". I asked: why not Snowflake? They have an organisation large enough to deliver a lot of engineering, even if their execution may not match.

Relative scale also comes into question. For the rest of us in the modern data stack, Snowflake is a behemoth, with 1bn USD in revenue and an organisation to match. For the tech industry at large, they are a big fish that could easily be swallowed by a whale. It doesn't necessarily matter that Snowflake's revenue from data use cases (100% of their revenue) is possibly the largest in existence - if one of the bigger vendors in the space decided to buy or beat them, Snowflake could easily be outspent on R&D for years to come. Some of the cloud vendors in particular have such strong distribution networks that a product nearly as good as Snowflake is enough to keep them on the menu.

I remember the feeling at Summit London in 2019: Snowflake felt unstoppable. Their sales reps were claiming that two thirds of their new business came from orgs on AWS Redshift. I remember an AWS rep being happy for our company to move off Redshift to Snowflake, just as long as we didn't go to GCP! I also remember talking at the 2019 Summit to a few of my ex-colleagues who had spent years working on a Hadoop project with Hortonworks (it failed); much to their consternation, I said I couldn't understand why anyone would choose Hadoop any more with Snowflake available.

However, with a few exceptions, Snowflake hasn't released substantive new features since I deployed it at Lyst. Snowpark GA with Python is relatively recent, and features like the Query Acceleration Service (which frees users from having to t-shirt size warehouses for their queries) kept being pushed further into the distance; it's still in preview. It started to feel like Snowflake was afraid of releasing features that would make their own product more efficient. Their consumption model rewards them for inefficiency, and once you're judged by revenue, it's never enough.

BigQuery, by contrast, has kept up a strong pace of new feature releases over the last few years. Seriously, read their release log... it's impressive. They are eliminating their weaknesses with purpose and at pace. I've used it in the last two orgs I've worked in and believe it suits a smaller organisation better than Snowflake. It truly has no dials or buttons to optimise performance (beyond partitioning), you don't need to worry about cluster size, and it's free up to a certain level of usage every month (if your data is small, it may stay free for a long time). It just works. I would recommend that any org on GCP try it before any other DWH. Google could well be the right org to build the "complete data stack" in the cloud. There are great synergies between building this infrastructure and Google's own internal workloads, which are more data-heavy than those of Microsoft, Amazon, Oracle and Salesforce. Having used BigQuery in an Alphabet interview process, I'm fairly certain they are dogfooding their own product, too. What better way for BigQuery PMs to learn how to improve their product than having the whole Alphabet group request features and find bugs?

Jordan Tigani, who worked on the BigQuery project, has gone on to found MotherDuck, which is not the first hosted DuckDB startup and won't be the last. ClickHouse and Trino are seeing similar startup activity around them.

It feels like Snowflake's reluctance to innovate on query efficiency, in order to preserve revenue growth, has invited innovation and competition from others. What's great is that most of these are built on open source, too. And that's without mentioning Databricks, who look increasingly like a strong option for an advanced data org. I recently attended the London dbt meetup, where someone mentioned moving from Redshift to Databricks; until then, I hadn't heard of anyone doing this.

Snowflake's market cap to revenue multiple has declined from its record-breaking IPO levels. All of these circumstances make me feel that Snowflake needs to impress with real innovation this week. Not more use cases for the same tech - genuinely new tech enabling genuinely novel use cases, or powerful, game-changing new features.

After

Did the announcements meet my expectations? In short... yes.

There were a number of interesting and important new features. Some of the things I described above, around making their existing analytical query engine easier to use and more efficient, weren't touched; instead, they've chosen to build new things and expand their footprint. This is a valid commercial strategy, as it allows growth while protecting and augmenting their analytical query engine business.

Unistore was announced, which makes Snowflake an HTAP database. This is brilliant, as it enables real-time analytics without needing to ship events or do any EL - the analytics dataset is the OLTP dataset. It could potentially let a customer use Snowflake alone to store state. How much this will cost remains to be seen, and it may only make sense for large use cases where Aurora or AlloyDB would currently be the choice, but it's still a great new use case.

Iceberg tables address a key ask from engineers over the last few years: being able to programmatically access Snowflake file storage. In truth, it's Snowflake operating on shared file storage in the open Apache Iceberg table format, rather than opening up its own storage, but the effect is the same.

Native data applications let anyone develop an app and start monetising it in the Snowflake Marketplace. This opens up a whole world of possibilities, and because all applications run on the customer's own Snowflake resources, those possibilities are safe. I'm thinking of making a data app just for the fun of it, and also to experience the process.

Search optimisation for new data types, a 10% efficiency boost on AWS, enhanced streaming, enhanced materialised views, large-memory instances for ML, Streamlit sheets for use directly in Snowflake... Snowflake have delivered this year in a way I didn't expect.

In addition to the things I've mentioned above, they released some functionality to increase their reach into enterprise customers on legacy stacks: support for on-prem data, SnowGrid to enable multi-cloud and easier data migration, and a few governance features. As I've said before, and as Benn alludes to in the tweet above, a huge part of the market is tied up in legacy stacks that those companies struggle to move away from. If Snowflake can help even a small percentage of this market, it will unlock growth from large enterprise customers with deep pockets, who by this point may be envious of less constrained orgs enjoying cloud SaaS tools like Snowflake.

As you can probably tell from my tone and my low expectations before the conference, I'm genuinely impressed. I hadn't expected to be.