Just in time Analytics

A move towards analytics driven orchestration

It’s conceivable that in the not too distant future, most analytics will be event-driven and real-time (or at least near real-time). This becomes possible if all sources, even third-party platforms, are able and expected to write events in real time to shared storage or data warehouses. Many data sources (CDP, CRM, payment processors…) can already write events in micro-batches to customer cloud storage or data warehouses, and ELT providers increasingly support streaming use cases and CDC streams. This, together with a growing number of real-time data warehouses like Rockset and Materialize, shows the promise of real-time analytics becoming normal, with SQL still being the API that enables broad access.

However, we’re not there yet, and there are still things we can do to improve on how batch analytics runs.

A very common model today is that a tool like Airflow/Dagster/Prefect orchestrates analytics pipelines. The orchestration tool triggers all extraction jobs and ensures they are complete before triggering the transform jobs that use the data just extracted and loaded. This makes the orchestration tool the driver of the analytics pipeline: it pulls data from sources, pushes it into the data warehouse, and then pushes it onward by triggering transform jobs.

What is suboptimal here? The main problem with this flow is that the DAG of the orchestration tool has become a bottleneck: a group of EL orchestrator tasks runs together before the Transform node can run on their output. Some tasks in the group finish earlier than others, and the data they loaded grows stale while the remaining tasks finish. The DAG of the Transform node isn’t allowed to proceed as fast as it could because it’s waiting for a whole collection of tasks in the orchestration DAG to finish.
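To make the staleness point concrete, here is a minimal sketch. It assumes all EL tasks start together and the transform starts only when the slowest one finishes. The task names and durations are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ELTask:
    name: str
    duration_min: float  # hypothetical load time in minutes


def staleness_at_transform_start(tasks: list[ELTask]) -> dict[str, float]:
    """Minutes each source has been sitting idle when the transform starts.

    Assumes every EL task starts at the same moment and the transform
    only begins once the slowest task in the group has finished.
    """
    transform_start = max(t.duration_min for t in tasks)
    return {t.name: transform_start - t.duration_min for t in tasks}


tasks = [ELTask("crm", 5.0), ELTask("payments", 12.0), ELTask("events", 40.0)]
waits = staleness_at_transform_start(tasks)
print(waits)  # {'crm': 35.0, 'payments': 28.0, 'events': 0.0}
```

In this toy case the CRM data is already 35 minutes old before any model touches it, purely because it was grouped with a slower task.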

What if the EL tasks could be run exactly when needed? If you think about the sources in a dbt DAG, each one only needs to be updated at the point the next node or nodes that depend on it need to run.

dbt already has the concept of pre-hooks on models, which could be used to update a source ahead of a model run. The issue with this approach is that a source used by many models becomes troublesome to update, as it’s unclear which model should carry the pre-hook. You end up with a staging model that has the pre-hook to update the source, and all descendant models in the DAG depend on it. This approach is probably fine, but if the source itself could have an update trigger defined with it, to be called when the source is first used in a dbt run, this would be even better and cleaner in code.
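The "update on first use" idea can be sketched in a few lines of Python. This is not dbt's API: `MODEL_SOURCES`, `run_models` and `update_source` are hypothetical names, and the model list is assumed to be already in execution order.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical mapping of models to the sources they read, in execution order.
MODEL_SOURCES: dict[str, list[str]] = {
    "stg_orders": ["payments"],
    "stg_customers": ["crm"],
    "fct_revenue": ["payments", "crm"],
}


def run_models(
    model_sources: dict[str, list[str]],
    update_source: Callable[[str], None],
) -> list[str]:
    """Walk the models in order, refreshing each source once, on first use."""
    refreshed: set[str] = set()
    log: list[str] = []
    for model, sources in model_sources.items():
        for source in sources:
            if source not in refreshed:
                update_source(source)  # the just-in-time EL call
                refreshed.add(source)
                log.append(f"update {source}")
        log.append(f"run {model}")  # placeholder for actually building the model
    return log


calls: list[str] = []
log = run_models(MODEL_SOURCES, calls.append)
print(log)
```

Because the trigger belongs to the source and not to any one model, `fct_revenue` reuses the refreshes already done for the staging models, and nobody has to decide which model owns the hook.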

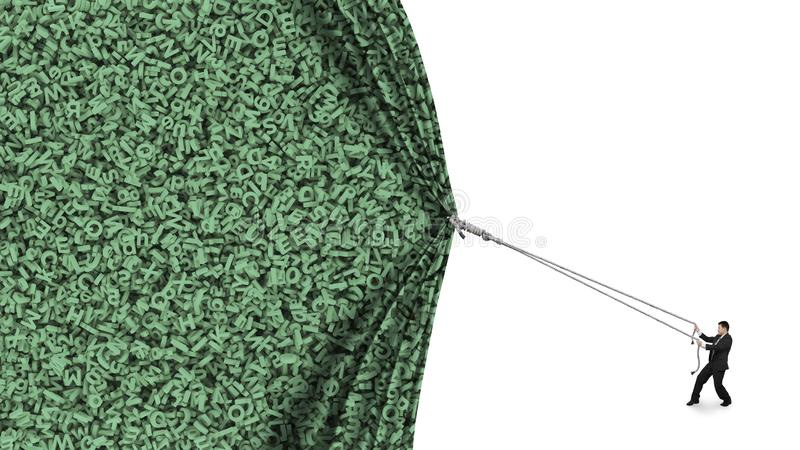

If all sources for a dbt DAG could be called just in time like this, then the need for an orchestration tool ceases; you can simply run your dbt jobs on the schedule desired. This schedule may be driven by the cadence of source updates, e.g. sources which only have new data once a day. The config of the source update trigger, mentioned above, could include this known cadence, and therefore prevent a source being forced to update when no new data is available. This would make the dbt job run faster when it is scheduled at a higher cadence than some of its underlying sources update. It also allows the fine-grained Transform DAG to drive EL, its shape being more like a tree and root system than a production line.
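A cadence check of this kind could look roughly like the following. The function name `needs_update` and the idea of storing a `last_loaded` timestamp per source are assumptions for illustration, not an existing dbt config.

```python
from datetime import datetime, timedelta


def needs_update(last_loaded: datetime, cadence: timedelta, now: datetime) -> bool:
    """True when at least one full cadence interval has passed since the last load."""
    return now - last_loaded >= cadence


now = datetime(2024, 1, 2, 12, 0)
last_loaded = datetime(2024, 1, 2, 6, 0)  # both sources were loaded 6 hours ago

# A daily source has nothing new yet, so the trigger is skipped.
daily = needs_update(last_loaded, timedelta(days=1), now)
# An hourly source is overdue, so the trigger fires.
hourly = needs_update(last_loaded, timedelta(hours=1), now)
print(daily, hourly)  # False True
```

A dbt job scheduled every hour would then only pay the EL cost for sources that can actually have new data.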

With the metrics layer and real-time analytical stack, we can take this one step further and do the whole of ELT (EL being continuous and T driven by the specific metric called) at the point a metric is called. In the tree metaphor, it’s like being able to form a fruit at the moment it’s reached for.

Simplification is good, and not having to manage an orchestration tool would make analytics engineering even easier to learn and faster to accomplish, especially as there are no downsides to data freshness and quality (dbt tests could be run on sources after updates are finished). Both are actually improved: data is fresher because sources are loaded just in time, and it’s easier and richer to write tests in a transform framework than in an orchestration tool.

Thought, debate and dissent are welcome as always!

I like this idea of a central orchestrator, but wouldn't a pre-hook just move the bottleneck you describe from the realm of Airflow to the realm of dbt? The pipeline still has to run and fetch the data, it's just that now dbt would be calling the pipeline instead of Airflow?

An alternative approach is similar to Meltano's, where _each_ EL pipeline can run the affected dbt transforms right after loading. So rather than doing one Big Batch of dbt transforms, you could do microbatches of transforms just after loading, a la `meltano elt from-salesforce to-snowflake --> dbt run -m source:salesforce+`
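As a rough sketch of that per-pipeline trigger, the helper below builds the dbt command for one source and everything downstream of it. `dbt_command_for_source` is a hypothetical helper, it uses dbt's `--select` flag with the `source:<name>+` graph selector, and it only builds the command without running it.

```python
from __future__ import annotations


def dbt_command_for_source(source_name: str) -> list[str]:
    """Build a dbt invocation that selects a source's downstream models."""
    # The trailing "+" is dbt's graph operator for "and all descendants".
    return ["dbt", "run", "--select", f"source:{source_name}+"]


cmd = dbt_command_for_source("salesforce")
print(" ".join(cmd))  # dbt run --select source:salesforce+
```

Each EL pipeline could hand this command to whatever runs its post-load step, so only the models affected by that load are rebuilt.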

The big secret of analytical query optimization is that you do it ahead of time as much as possible. Pre-aggregate, pre-calculate and preload so that runtime analytics are fast. If your use cases are ad hoc analytics and data discovery or data science experiments then the slow performance of data JIT might be fine. If it’s streaming data then by definition it has to be running continuously, also doing precalculation and preaggregation as much as possible. Otherwise your query will take longer than the data takes to arrive and cease to be real time.

I like the architectural approach of removing the Airflow or other orchestration bottleneck because pipelines can be loosely coupled, self-describing and self-executing, but usually you would trigger based on arrival of data (push) rather than when it is requested by analytics (pull). It would certainly be cheaper as far as compute goes to do it JIT, but a hell of a lot slower for users and not a great UX.