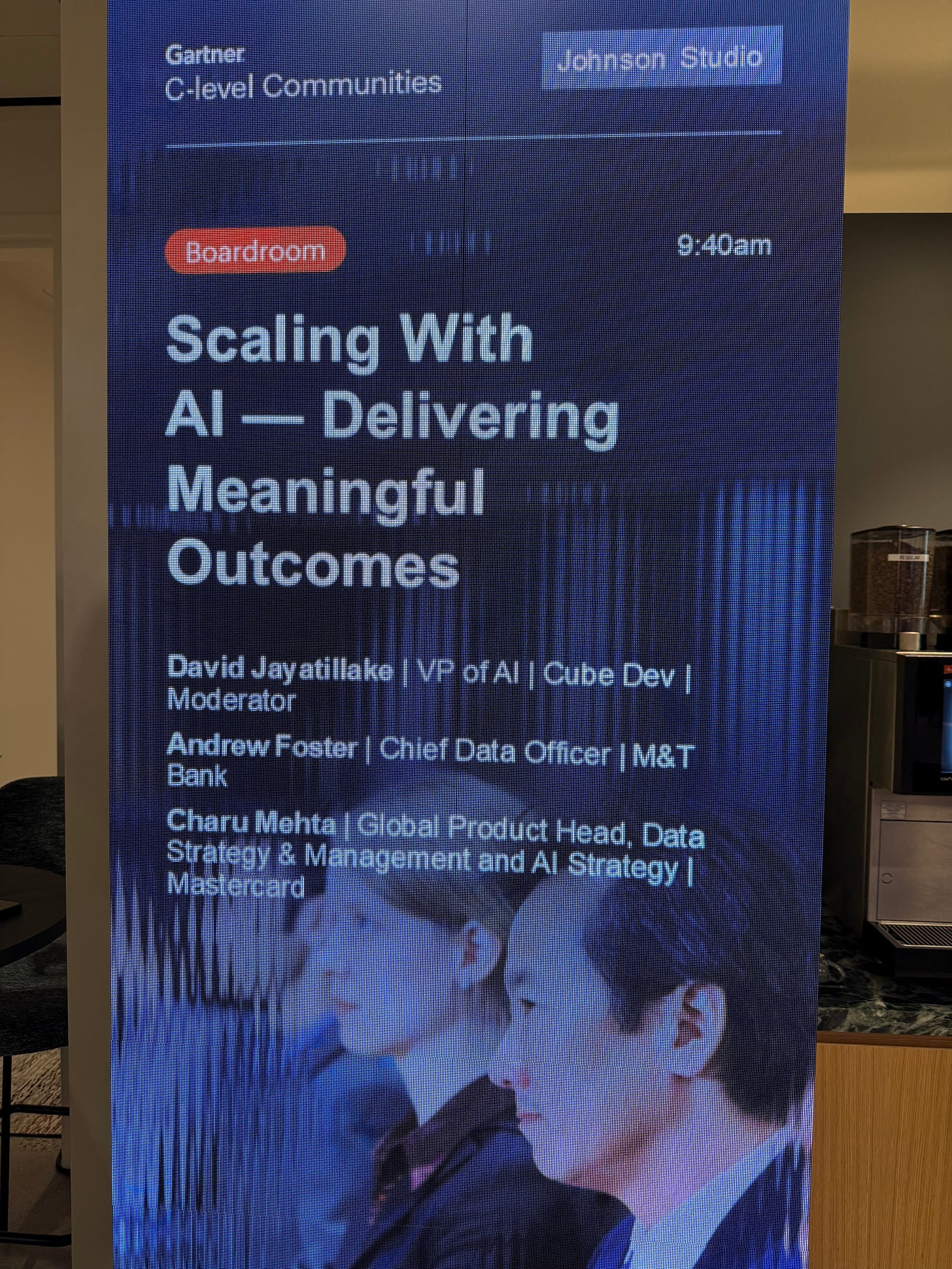

Earlier this week, I moderated one of the boardroom discussions at Gartner’s CDAO C-level Communities event.

It was quite a different experience from speaking at a conference, which I have done a few times before. You can’t really plan how the discussion will unfold.

You can prepare a set of questions to guide the conversation, but ideally the discussion flows organically, without too much intervention from you or your discussion leaders, who are there to help steer it if necessary.

There were long periods during the discussion when various attendees were bouncing ideas off one another and allowing others to participate… I didn’t want to intervene; they were doing great on their own!

What was truly challenging was not proposing the solution directly. The topic was scaling AI usage in organisations, which, given the conference audience, was clearly tied to data. As you know from many of my previous posts, I believe that text-to-semantic-layer is superior to text-to-SQL. Scratch that, I know it is. I often had to bite my tongue when attendees detailed their approaches to enabling natural language access to data. Instead, I had to guide them towards considering the potential drawbacks of lesser methods.

The conference audience was distinctly different from any I had encountered before. Snowflake Summit, Big Data London, dbt Coalesce, Databricks AI World Tour… whenever I have been to these, I have always recognised familiar faces. Not here. These folks aren’t early adopters; they are in the long tail of the late majority. Microsoft’s dominance in large regulated enterprises was very evident. If members of this audience were permitted to use AI at work at all, it was Microsoft Copilot, without exception.

It truly struck me how much of a disadvantage this can be. Some people spoke of how poor Microsoft Copilot is at transcription during Teams calls. Once more, I had to bite my tongue rather than mention how effective tools like Shadow and Granola are for this use case. After all, they would never be allowed to use them, so what’s the point in mentioning them at all?

It is almost always true that startups and companies focused on building the best tool for a specific use case will offer superior functionality compared to those that merely bolt it on as an additional feature of their existing product suite.

There is significant caution in regulated industries such as banking, insurance, healthcare, and pharmaceuticals regarding the use of AI. Many are still evaluating it from a security perspective. Some attendees worked in unregulated industries, and the difference in progress was stark. New entrants to regulated industries, who have no existing business to put at risk when bringing AI features and services to market, may have a significant opportunity to disrupt.

The other side of the coin, however, is that these large regulated enterprises have huge resources to wield. They have allocated their AI strategic budgets for 2025. Moreover, they have numerous teams they can assign or reassign to explore AI. If the top-level leadership in these companies wishes to advance with AI, they will, although it may simply take longer than in other sectors. Additionally, incumbents in regulated industries are shielded by that same regulation, which makes it difficult for new entrants to disrupt them; thus, they can afford to proceed more slowly and take on less risk.

I remember attending DuckCon, where Cube and Crunchy Data both had small posters on display to engage with people who were interested. I never expected an acquisition of this magnitude to happen a mere 5 months down the line! Congrats to the Crunchy Data team!

I must say that I’m a bit sad that I never got to try it before the acquisition, and I doubt that they will keep DuckDB as their OLAP query engine of choice now that they are at Snowflake.

The year of acquisitions continues - Airflow is being kintsugied back together and having a warehouse put in around the back.