sqlmesh init duckdb

Let's go fly in the blue sky

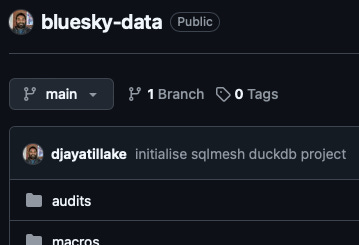

I’ve created a public repository called bluesky-data, here - feel free to follow along as I work on it and even to contribute to it if you like.

It was as simple as checking out the repo to a local folder in Cursor, opening a terminal, creating and activating a fresh python venv, upgrading pip, installing sqlmesh and running sqlmesh init duckdb. Much less hassle than getting sqlmesh to work with an existing dbt project.

My end goal with this project is to build a data model using the Bluesky data available via Jetstream (shout out to Jake Thomas for making this so easy), but maybe also using dlt in sqlmesh to get data from the Bluesky APIs which use cursors to paginate, preventing their use in DuckDB SQL alone.

If you think about what the contemporaries of these two types of data would be in a real data project - the jetstream feed is like a product analytics feed of events that is real time but limited. If I make sqlmesh models that don’t materialise as tables, downstream of models which consume from the Bluesky jetstream, then I can have real time data availability on this source.

With the data from the Bluesky APIs, I’m looking for a more complete picture up to the point I run my sqlmesh models. I will definitely want to materialise the raw data from the APIs as a table. It’s much closer to a batch ELT process that you would run for non-product analytics use cases, where completeness and longer history are more important.

It would be cool to collect some post and post interaction data, but I don’t think it’s feasible to query enough of it quickly enough from the Bluesky APIs to make it interesting. I will most likely stick to using graph data from the getFollows and getFollowers endpoints, along with the jetstream.

After reading the guide on using dlt with sqlmesh, I have realised that it actually makes more sense to start with dlt and then initialise sqlmesh inside the existing dlt project. It’s possible for sqlmesh to pick up state from an existing dlt pipeline:

SQLMesh will also automatically generate models to ingest data from the pipeline incrementally. Incremental loading is ideal for large datasets where recomputing entire tables is resource-intensive. In this case utilizing the

INCREMENTAL_BY_TIME_RANGEmodel kind. However, these model definitions can be customized to meet your specific project needs.

So, I have reverted my sqlmesh init duckdb commit, given there is no actual work to save, and will dive into dlt tomorrow.

Super cool. I might try sqlmesh + duckdb for twitch analytics