My Obsidian community plugin repo is now at 13 GitHub stars! Woohoo!

Vibe coder

I’ve mentioned before that I’ve kept using Windsurf to build more things since my success with it on sqlmesh and dlt.

Since then, I’ve had more input about features that users would like to see, and I’ve made yet another release.

So this is what it feels like to be the maintainer of an open source project. People ask for stuff and mostly don’t contribute (so far, no one has). Yet it’s still satisfying to hear what they want and to build it. It’s even inspired me to come up with some issues of my own: new features to extend my fledgling plugin.

What if LLM Tagger could also generate links between docs, so that you could not only search your docs well but also see how they connected? A second-brain synapse plumber. How would this work? One option is to trust the LLM to choose which docs to link together: I could explain how Obsidian linking works in the prompt and see what happens. Alternatively, I could vectorise all the docs in my vault and link the ones with the most similar vectors. Perhaps I should try both. Getting the LLM to do it is a much lower lift, so I should probably start there. It won’t require adding any packages, as a vector database would.
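For the vector route, the core idea is small enough to sketch. Below, each note gets a vector, every pair is compared with cosine similarity, and each note is offered its closest neighbours as Obsidian `[[wikilinks]]`. This is a hypothetical illustration, not how LLM Tagger works today. The bag-of-words `vectorise` function is an assumption that stands in for a real embedding model. The names `suggest_links`, `top_k` and `min_score` are also made up for the example.

```python
import math
import re
from collections import Counter


def vectorise(text: str) -> Counter:
    """Bag-of-words term counts: a stand-in for a real embedding model (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def suggest_links(notes: dict[str, str], top_k: int = 2, min_score: float = 0.1) -> dict[str, list[str]]:
    """For each note, suggest wikilinks to its top_k most similar other notes (hypothetical helper)."""
    vectors = {name: vectorise(body) for name, body in notes.items()}
    links: dict[str, list[str]] = {}
    for name, vec in vectors.items():
        scored = sorted(
            ((cosine(vec, other_vec), other) for other, other_vec in vectors.items() if other != name),
            key=lambda pair: (-pair[0], pair[1]),  # highest score first, ties broken by name
        )
        links[name] = [f"[[{other}]]" for score, other in scored[:top_k] if score >= min_score]
    return links


notes = {
    "dbt vs sqlmesh": "comparing sqlmesh and dbt for data transformation pipelines",
    "dlt ingestion": "loading data with dlt pipelines into duckdb",
    "sourdough": "flour water salt starter bread baking",
}
print(suggest_links(notes, top_k=1))
```

The `min_score` floor matters. Without it, every note would get linked to *something*, even a sourdough recipe with nothing in common with the rest of the vault.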

I was also thinking of adding something more mundane. I used to use an app called Pocket to save articles and blog posts I wanted to read later and possibly offline (I used to spend a fair bit of time on the London Underground). What if I could get LLM Tagger to take a URL, open it in a browser or otherwise scrape the content, summarise it, tag it and link it to other docs in my vault, storing the content in an appropriate location? I know this sounds terrible in that it suggests not reading anything, but it may allow a user to focus on reading the content most relevant to their work and interests.
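The "otherwise scrape the content" half of that idea could start as simply as pulling the title and visible text out of a page's HTML and wrapping it in a note with frontmatter tags. The sketch below is a minimal one under some stated assumptions. It skips the HTTP fetch so it runs offline. The `tags` list is assumed to come from the LLM tagging step, which is not shown, and so is a summary. `TextExtractor` and `html_to_note` are hypothetical names.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects the page title and visible text, ignoring script/style contents."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.chunks: list[str] = []
        self._skip_depth = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        text = data.strip()
        if not text or self._skip_depth:
            return  # drop whitespace and anything inside script/style
        if self._in_title:
            self.title += text
        else:
            self.chunks.append(text)


def html_to_note(url: str, html: str, tags: list[str]) -> str:
    """Build an Obsidian-style markdown note; tags are assumed to come from an LLM step."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    tag_lines = "\n".join(f"  - {t}" for t in tags)
    body = "\n\n".join(parser.chunks)
    return f"---\nsource: {url}\ntags:\n{tag_lines}\n---\n\n# {parser.title or url}\n\n{body}\n"
```

A real version would fetch the page first, for example with `urllib.request.urlopen`. It would also need something smarter than this for JavaScript-heavy sites, which is where opening the page in a browser comes in.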

I really struggle to read all the content that I should, in theory, be reading to keep up with data, AI and other interests. People have so many Chrome tabs open because they want to read something but don’t have the time right now. If they knew which of those tabs were the most relevant, they could prioritise. It’s like a content funnel: sounds interesting > summary is interesting > has many tags related to things I’m interested in > is heavily linked to my notes and other parts of my second brain. As content moves through the funnel, you commit more time to taking it in. The pieces that make it all the way through are probably the ones you should really pay attention to and read in detail.
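The funnel maps naturally onto a series of filters. Each saved item gets a "depth" for how many stages it passes, and the deepest items are read first. This is a hypothetical sketch. The `Item` fields, the thresholds `min_tags` and `min_links`, and the idea of a numeric depth are all illustrative assumptions, not something LLM Tagger implements.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    title: str
    summary_interesting: bool          # hypothetical: my judgement after reading the summary
    tags: set[str] = field(default_factory=set)
    link_count: int = 0                # hypothetical: links into existing notes in the vault


def funnel_depth(item: Item, interests: set[str], min_tags: int = 2, min_links: int = 3) -> int:
    """Return how far an item gets: 1 = merely saved, 4 = made it all the way through."""
    stages = [
        lambda i: i.summary_interesting,                 # summary is interesting
        lambda i: len(i.tags & interests) >= min_tags,   # enough tags I care about
        lambda i: i.link_count >= min_links,             # well linked to my notes
    ]
    depth = 1  # stage 1: it sounded interesting enough to save
    for passes in stages:
        if not passes(item):
            break
        depth += 1
    return depth


def prioritise(items: list[Item], interests: set[str]) -> list[str]:
    """Titles ordered from deepest in the funnel to shallowest (stable for ties)."""
    return [i.title for i in sorted(items, key=lambda i: funnel_depth(i, interests), reverse=True)]
```

Making each stage depend on the previous one mirrors the point about committing more time the further something gets. An item with lots of links but a dull summary still stops at stage two.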

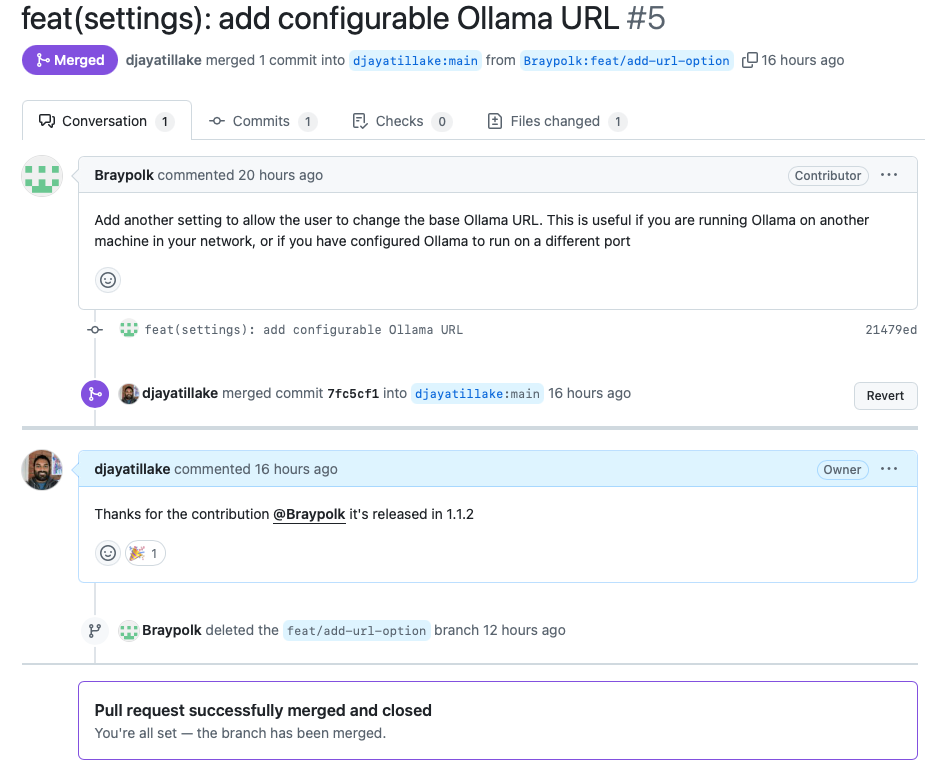

Since starting to write this post, I’ve had my first PR for the repo! True open-source legitimacy. It’s a small change that makes the Ollama URL configurable, in case the user is running Ollama somewhere other than the default location, but it’s still a contribution from someone else!

Merged, and published in a new release for all to use.
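The gist of a configurable Ollama URL is to build every request from a base URL setting instead of a hard-coded host. This is not the code from the PR, just a minimal Python sketch of the idea. It uses Ollama’s default `http://localhost:11434` and its `/api/generate` endpoint with `"stream": False`, which returns a JSON object whose `response` field holds the generated text. The function names and the example model name are assumptions.

```python
import json
import urllib.request

DEFAULT_OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_generate_request(prompt: str, model: str, base_url: str = DEFAULT_OLLAMA_URL) -> urllib.request.Request:
    """Build a POST to <base_url>/api/generate; base_url is the user-configurable setting."""
    endpoint = base_url.rstrip("/") + "/api/generate"  # tolerate a trailing slash in settings
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        endpoint, data=payload, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(prompt: str, model: str, base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the request and return the generated text (requires a reachable Ollama server)."""
    req = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


# e.g. Ollama running on another machine on the LAN (hypothetical address and model):
# generate("Suggest tags for this note: ...", "llama3.2", "http://192.168.1.20:11434/")
```

Keeping request construction separate from sending it makes the URL handling easy to check without a running server.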

There is a lot being written, both positive and negative, about vibe coding right now. I think I agree with this post by Manuel Kiessling.

> While I have not yet found the perfect metaphor for these LLM-based programming agents in an AI-assisted coding setup, I currently use a mental model where I think of them as “an absolute senior when it comes to programming knowledge, but an absolute junior when it comes to architectural oversight in your specific context.”

Manuel goes on to argue that this makes current senior software engineers best placed to get the most out of coding with LLMs, but they aren’t the only ones with good architectural knowledge. While I’m not a software engineer, I am an able data and systems architect. Perhaps this is why I, too, can get a lot out of building with LLMs.