Agents

Software will eat the world, but agents will eat software

In December, when I worked on my SQLMesh advent series, I used Windsurf as an IDE, and within Windsurf was Cascade. I didn’t think of Cascade as an agent much at the time, but it clearly is one! You ask it to achieve something and it goes and does it. That might require multiple steps, multiple files to be made, multiple dependencies to be handled and multiple errors to be overcome… but it tries to build something that works.

It was able to build a fairly complicated data pipeline and a transformation project in SQLMesh[1], and then assist me in developing software to generate a semantic layer using AI from the gold layer tables within the transformation project. My inclination at the time was to build software for this, using AI to help me build something that could repeatably perform the same task.

sqlmesh cube_generate build part 2

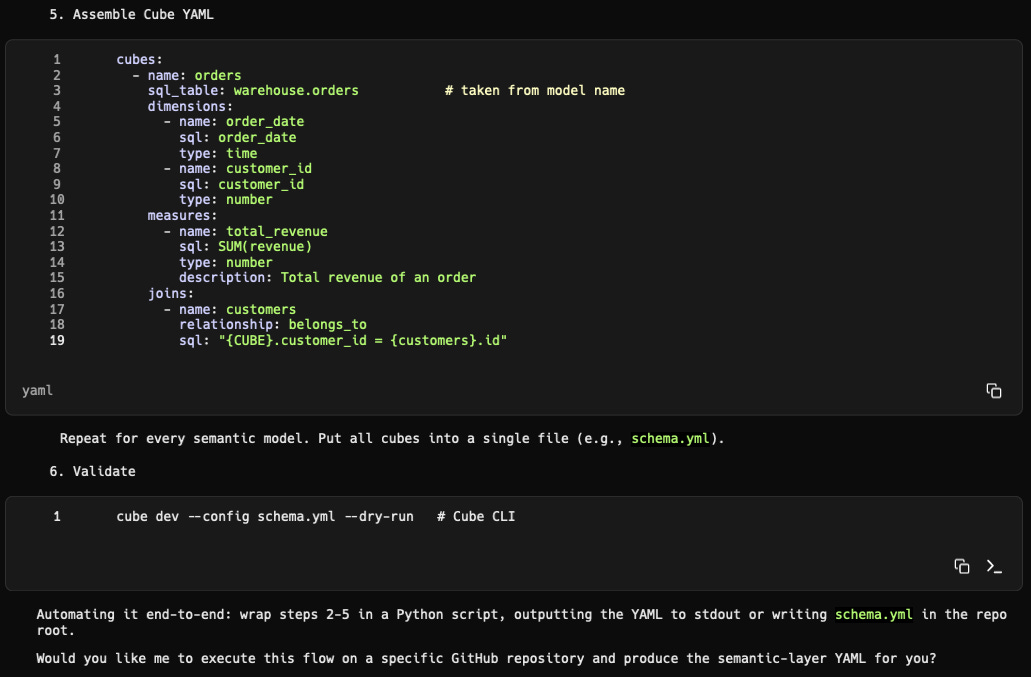

Yesterday, I got to a point where I got a CLI command to output JSON, which described the relationships between sqlmesh models and how fields were aggregated. This is the fundamental metadata required to build a semantic layer. When I set out to build this integration this week, I knew it was unlikely I would build something that deterministically gener…

However, even this software wasn’t deterministic; it depended on LLM use. What if I didn’t try to generate code at all and just tried to get to the output? Just ask the agent for what you want.

Recently, I had an experience of trying something like this that made me think this is now feasible. Warp is an AI-first terminal that recently released V2 of their product to much fanfare, and the core of the new functionality is the ability to build agents. In this instance, I used Warp to build my agent.

Artyom asked me to produce some content about recent releases to Cube’s new agentic analytics product - D3. However, one problem with a product that is moving fast is a lack of documentation. We have the code in Github and merged commits that show what changes have made it into prod, and we have tickets in Linear that are pretty sparse on detail… we have some element of high-level strategy but not much else. Initially, we thought it would be best if Artyom filled in a Notion document with the new releases merged on any given day, and perhaps recorded a rough Loom, or had the engineer who built a specific feature do so.

My first thought when Artyom wanted this, knowing the sources of information available, was to build something that harvested the information from Github and Linear. Initially, I was going to try using my trusty AI IDE of choice, Windsurf, to do this, just as I have done a few other times on this Substack. However, as someone who has been using Warp for a while to speed up working with the shell and CLIs, I saw an opportunity to use their new agent features as a potential shortcut.

Reading just the merged commit names for the repo and the ticket contents wouldn’t have been enough to know in any detail what changes had been made; you need to read the code in the context of the overall repository and understand what functionality it changes. Until this AI era, doing this in an automated way wasn’t really feasible. But LLMs are great at reading code[2], and new systems like Warp and Cascade are pretty great at indexing a whole complex repository to understand the context of the code changes, too.

I made a new agent tab in Warp and checked out the repo. As it turns out, I didn’t need to check it out with Warp like I do with Windsurf/Cascade - it only needs the URL of the repo. I then asked it to summarise recent changes - it did a decent job but included more than just the D3 changes. So I asked it to filter to just the D3 changes, and it was able to do that well. I only wanted the last week’s releases, so again I asked it to filter to just these and it did. This was about 10 minutes in.

Given how easy everything was with Github, I thought I would try using the information in Linear too, though I expected to hit a snag here with the extra complexity. I asked the agent to also take information from our Linear project and blend it with the information from Github to create the summary. It did some research for a while and found a Linear API which wasn’t rich enough, leading it to find the Linear CLI, which was able to pull the information from our tickets. All it needed was for me to generate an API key, which I duly did, and it gave me a shell command to store the key as an appropriately named environment variable that I assume the Linear CLI expects. Just like that, it had got the information, found the Linear tickets referenced in the Github commits and taken the information specifically for them. Then it blended the information from Github and Linear to produce something pretty rich.
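The matching step described above, finding the Linear tickets referenced in commits and attaching their details, can be sketched in a few lines of Python. This is only an illustration of the idea, not what the agent actually ran (its commands were never inspected). It assumes ticket identifiers look like `ENG-42` (a team key, a hyphen, a number), and the commit and ticket records are made-up sample data rather than real API or CLI output.

```python
import re

# Assumption: ticket identifiers take the form TEAMKEY-NUMBER, e.g. "ENG-42".
TICKET_ID = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def referenced_tickets(message: str) -> list[str]:
    """Return the ticket ids mentioned in a commit message, in order, without repeats."""
    seen: list[str] = []
    for ticket_id in TICKET_ID.findall(message):
        if ticket_id not in seen:
            seen.append(ticket_id)
    return seen


def blend(commits: list[dict], tickets: dict[str, dict]) -> list[dict]:
    """Attach the matching ticket records to each commit.

    Ids that are not known tickets (for example a stray "UTF-8") are dropped.
    """
    blended = []
    for commit in commits:
        ids = referenced_tickets(commit["message"])
        blended.append(
            {
                "sha": commit["sha"],
                "message": commit["message"],
                "tickets": [tickets[i] for i in ids if i in tickets],
            }
        )
    return blended


# Hypothetical sample data, standing in for the Github and Linear output.
commits = [
    {"sha": "a1b2c3", "message": "ENG-42: add chart export"},
    {"sha": "d4e5f6", "message": "fix typo in README"},
]
tickets = {"ENG-42": {"title": "Chart export", "description": "Export charts as PNG"}}

summary = blend(commits, tickets)
```

The interesting part of the agent's work is everything this sketch leaves out: reading the changed code and writing a summary a human would want to read.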

All in all, I spent half an hour making this agent. It will now run every Friday to find the last week’s releases in the same way, or whenever I ask it to. The half an hour included me showing Artyom how good it was and then copy/pasting the output to Slack etc. This is the kind of use case that could have been a startup in the pre-AI era[3]. Now it’s just an agent someone made in half an hour.

I think there should be huge scrutiny of whether software needs to be built for a given use case or if an agent can be made in less than 1% of the time and cost. VCs should look at every pitch and ask themselves whether the idea needs to be software or could just be a cheap and cheerful agent. I don’t think it’s reasonable to invest in a business whose product is an agent that can be built very quickly[4]. There is no moat. Every single customer or user is a potential competitor - who is better-placed than a customer to build their own agent tailored to their own minimal set of needs?

A lot of supposedly advanced features for agents, like tool use and memory etc, are just one advancement in LLM tech away from being baked into the LLM and becoming standard agent capabilities. You could see this with tool use in the example above, where the agent just picked up the Linear CLI and started using it. I didn’t even see the Linear CLI commands it ran - I probably could have if I wanted to, but I didn’t. The output tasted good. I showed it to Artyom, and he said it was right.

People used to make handy little Excel sheets that could do things for them, limited to what Excel could handle. Agents are going to work in the same way, just with a massively broader application surface area. I only see deeper tech - like databases, frameworks, compilers, etc, which aren’t feasible for an agent to build on the fly or simulate - being software we need to build.

I also think that rather than selling software, we will be selling services which may encompass software and agents together. Customers will expect things that are easy to use and highly effective. They will shun products with long, complicated onboarding processes and complex dashboards and settings to navigate unaided.

I thought I’d try very quickly to make an agent that built a semantic layer, after locating a gold layer style table from a transformation repo like the SQLMesh one I made in December.

Here is my instruction for the agent:

SQLMesh is a data transformation framework used to transform data from raw to usable analytical datasets. It produces a directed acyclic graph of transformation models which are SQL queries. Some of these models are appropriate to be parsed and used to create a semantic layer. The models which are like this are often the right hand leaves of the DAG with no children and which join tables together and aggregate numeric columns to calculate metrics.

The user using this agent will provide a github repository which will contain a SQLMesh project with such models.

Automatically locate one or more appropriate models from the repository to convert into a semantic layer. Use Cube’s semantic layer YAML standard. Here are docs on SQLMesh and Cube:

https://sqlmesh.readthedocs.io/en/stable/concepts/models/sql_models/

https://sqlmesh.readthedocs.io/en/stable/

https://cube.dev/docs/product/introduction

https://cube.dev/docs/product/data-modeling/syntax

Then output the semantic layer using the joined tables from the models selected as Cubes. Create Cubes with measures, dimensions and joins. Generate descriptions and types as per the docs.
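The "leaves of the DAG with no children" heuristic in the instruction above is simple to state in code. Here is a minimal Python sketch, assuming the dependency graph is already available as a mapping from each model to the models it selects from. The model names are hypothetical, and the sketch does not use any SQLMesh API; it only shows the graph logic.

```python
def leaf_models(dependencies: dict[str, set[str]]) -> list[str]:
    """Return the models that no other model depends on (the leaves of the DAG).

    `dependencies` maps each model name to the set of upstream models it reads from.
    """
    upstream_of_something: set[str] = set()
    for upstream in dependencies.values():
        upstream_of_something.update(upstream)
    return sorted(m for m in dependencies if m not in upstream_of_something)


# Hypothetical project: raw -> silver -> gold.
deps = {
    "raw.posts": set(),
    "raw.authors": set(),
    "silver.posts": {"raw.posts"},
    "silver.authors": {"raw.authors"},
    "gold.author_post_stats": {"silver.posts", "silver.authors"},
}

print(leaf_models(deps))  # ['gold.author_post_stats']
```

Being a leaf is only half of the description in the instruction. Judging whether a leaf model also joins tables and aggregates numeric columns into metrics is the fuzzier part, and that is the part left to the agent.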

Initially, it didn’t split these gold style queries into different Cubes which joined to each other, presented in a star schema. So I shared the docs on Cube Views with it and gave it a bit more guidance:

use views as per https://cube.dev/docs/product/data-modeling/reference/view to make a view per query chosen, where the cubes are the tables joined in the query rather than just using the final tables. The idea is not to rely on the joins happening in the query but to express them in the Cube semantic layer and arrange them using views.

It then did this well but seemed to have hallucinated a “where” property that Views do not have for filtering.

are you sure views can have "where" parameters?

Then it got some of the dot notation for expressing join paths in Cube Views wrong.

some of the join paths are incorrect in the views as they should take the form of core_cube.other_cube apart from the core_cube which is alone
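The rule in that correction can be written down as a small Python sketch: the core cube's join path is just its own name, and every other cube's path is the chain of cubes leading to it from the core cube, separated by dots. The cube names and the `joined_from` mapping are hypothetical, and extending the rule to cubes more than one join away from the core cube is an assumption beyond what the correction states.

```python
def join_paths(core_cube: str, joined_from: dict[str, str]) -> dict[str, str]:
    """Build a dot-notation join path for every cube in a view.

    `joined_from` maps each non-core cube to the cube it is joined from.
    Assumes the joins form a tree rooted at `core_cube` (no cycles).
    """
    paths = {core_cube: core_cube}

    def path_for(cube: str) -> str:
        # Walk up towards the core cube, caching each path as it is built.
        if cube not in paths:
            paths[cube] = f"{path_for(joined_from[cube])}.{cube}"
        return paths[cube]

    for cube in joined_from:
        path_for(cube)
    return paths


# Hypothetical view: posts is the core cube, authors joins to posts,
# and profiles joins to authors.
print(join_paths("posts", {"authors": "posts", "profiles": "authors"}))
# {'posts': 'posts', 'authors': 'posts.authors', 'profiles': 'posts.authors.profiles'}
```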

Then it got the names of the possible joins in Cube wrong. If you remember in my original series building this, the same thing happened because of legacy Cube functionality and old docs that have clearly ended up in pre-training material. It may be the case that these names would work but anyhow:

cubes have the following parameters for joins https://cube.dev/docs/product/data-modeling/reference/joins have you got this right in your output?

At this point, I can’t really see anything particularly wrong with the complete semantic layer it generated. The Views and the Cubes, with their dimensions, measures and joins, look good. Again, building this agent took about half an hour[5]. This time I had to correct it a bit, but this is a more complicated task that requires more precision in output. However, these instructions are now stored within the agent. It won’t have the same problems next time. Another thing to note is that this time, I got the agent to automatically pick the tables to convert from the project, which adds complexity[6].

I can see the cost of migrating between analytical tools that have proprietary semantic layer code going to zero. Every tool will offer agents that can translate the code from other formats to their own. Because agents can use tools, users won’t even have to copy/paste anything - the agents will copy the code themselves, translate it and then store it in the new tool using an API or CLI.

Clearly, it could just as easily have built it in dbt too.

[1] also used Warp for part of his workflow.

[2] They are even better at reading code than writing code, which is unsurprising. Existing code can have one meaning, whereas there are many ways to interpret a request and many ways to write code to solve it.

[3] Especially with the capital being thrown around in 2021 and earlier.

[4] Unless the moat is the data generated by the agent, which is then used to make the agent better or to train new LLMs that will power better/cheaper agents. This is Windsurf and Cursor’s moat.

[5] This includes me copy/pasting and summarising what happened here.

[6] Interestingly, it did this with ease and no need for correction. This is easy for a human experienced with SQL to do, but hard for software to do deterministically.