Dabbling with Dagster - Part 2

Rebuilding a DAG

In my last post, I took a first look at Dagster Cloud Serverless and I was impressed.

As I mentioned at the end of the last post, I’m going to try to recreate the Airflow DAG that I created in my series on Airflow.

First, I will attempt to recreate it by trying to ingest the DAG from Airflow. I would be surprised if this works, given this DAG is really orchestrating work to be done remotely in Fivetran and dbt Cloud. It would need to know to use the equivalent Dagster packages and then would complain at me that it didn’t have my credentials set in Dagster Cloud. Maybe this will all work, but I’m sceptical.

Let’s have a try.

One thing to note about this development workflow is that, as I make changes, I have to commit them to my PR branch in order for the branch deployment in Dagster to reflect them. It’s a bit clunky - if I were using the dbt Cloud IDE, I would be able to test that my changes worked in the terminal without needing to commit them. Is the expectation that I would run Dagster locally, to test what I’m doing before committing to the branch? This seems to be the case currently, as otherwise Dagster Cloud would need to have an IDE.

Installing Dagster with pip worked first time on an M1 machine (I have since learned that the M1 was why I had so many issues trying to install Airflow locally before). I did have an issue with the version of Python I had installed locally and had to use virtual environments to get around this.

There is a helpful example repo, where some DAGs are ingested from Airflow ones. After some wrangling and installing lots of dependencies, including Airflow providers, I got my Airflow DAG ingested into Dagster:

However, as expected, when I try to run the DAG, it fails, as it doesn’t have access to any of the API keys required. In MWAA, I had put these into the connections part of the Airflow UI. It doesn’t look like Dagster will be able to handle connections for Airflow operators that are ingested. I could dig a bit deeper, but I’m simply going to say that I don’t think it’s the best idea to ingest Airflow DAGs into Dagster when you have to handle external connections.

In fact, one of the things I think Dagster Cloud is lacking is some way to manage secrets and environment variables in the UI. The Airflow UI was good at this. The ideal workflow for me would be to input these into the Dagster Cloud UI as connections and then be able to refer to the environment variables in code. The Dagster docs are a bit lacking here, too. As I am using Fivetran, which is SaaS with no open-source version, I have to be able to manage my API keys.

Having had a great chat with Daniel, Sandy and Jarred from Dagster, I’ve decided to go down the route of using Codespaces for developing with Dagster, as then I can use GitHub repository secrets for both Dagster development on Codespaces and deployment on Dagster Cloud. The credentials will then persist across multiple branch deployments. That isn’t the case with credentials stored via the Dagster Cloud CLI, which are stored against specific branch deployments. I can understand the value of doing this, as you can then use different development credentials, but setting them up each time you have a branch deployment, without automation, seems onerous to me.

…some weeks pass… 😅

Dagster Cloud now lets you set environment variables! It even has deployment scopes, so you can choose whether an environment variable is available in just one context or in several 🦾.
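As a rough sketch of the "set it in the UI, refer to it in code" workflow, here is how the code side might read those values. The variable names `FIVETRAN_API_KEY` and `FIVETRAN_API_SECRET` and the helper `load_fivetran_credentials` are my own hypothetical choices for illustration, not names that Dagster or Fivetran require.

```python
import os

# Hypothetical variable names; use whatever you set in Dagster Cloud
# or in your GitHub repository secrets.
REQUIRED_VARS = ("FIVETRAN_API_KEY", "FIVETRAN_API_SECRET")


def load_fivetran_credentials(env=None):
    """Read the Fivetran credentials from environment variables.

    `env` defaults to the real process environment, but any mapping can be
    passed in, which makes the function easy to check without real secrets.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        # Fail early with a clear message rather than part-way through a run.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "api_key": env["FIVETRAN_API_KEY"],
        "api_secret": env["FIVETRAN_API_SECRET"],
    }
```

Failing early when a variable is missing is a design choice: it surfaces a misconfigured deployment scope before any sync is triggered.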

In the process of using Dagster, I learned how to use Python virtual environments for the first time and it was, surprisingly, easier than I had expected. Daniel from Dagster gave me a few lines to run in my terminal to set one up and when I saw what it created, it all clicked! It feels like making a different folder structure for the version of Python you want to run and repointing running from here, rather than from the default folder.

This is one of those things that felt really scary before - I thought there would be memory and CPU allocation to manage and I had no idea how to do this, but this is because I’d conflated some of the properties of virtual environments with virtual machines. Shout out to the Brooklyn Data folks who made dbt-env to deal with needing different virtual environments per adapter and dbt version!
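To show how little is going on, here is a minimal sketch that creates a virtual environment with Python's standard-library `venv` module and lists what ends up in the folder. This is not the set of terminal lines Daniel gave me; the function name `create_env` and the folder name are just illustrative.

```python
import tempfile
import venv
from pathlib import Path


def create_env(target: Path) -> Path:
    """Create a virtual environment in `target` and return its config file path."""
    # with_pip=False keeps this quick; pass with_pip=True if you want pip installed.
    venv.create(target, with_pip=False)
    return target / "pyvenv.cfg"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg = create_env(Path(tmp) / "dagster-env")
        # The environment is just a folder: a config file plus bin/lib directories.
        print(sorted(p.name for p in cfg.parent.iterdir()))
        print(cfg.read_text())
```

The `pyvenv.cfg` file records which base Python the environment points at, which is the "repointing" idea described above: no memory or CPU allocation is involved.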

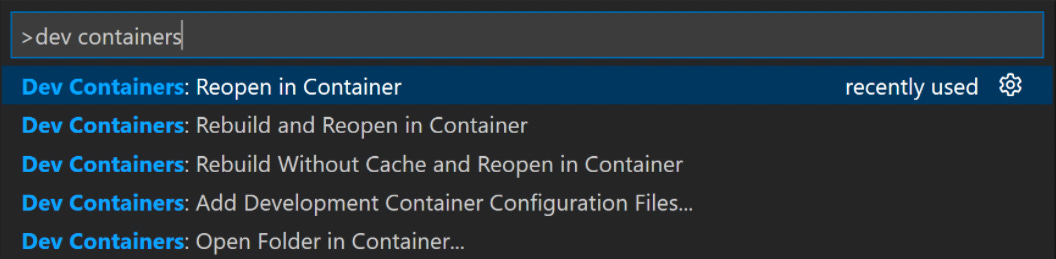

GitHub Codespaces now offers 60 hours of free usage for anyone! Making a dev container using the VS Code Dev Containers extension is very straightforward. You then don’t have to worry about environment issues or storing secrets on your machine, and you can develop in Dagster on any other device. Plus, anyone else can then run the repository using Codespaces or remote containers, too!

Even with the ability I now have to use environment variables in Dagster Cloud and my GitHub repository, it still looks to be very difficult to get the Airflow DAG that I imported into Dagster working. The Fivetran Airflow provider is designed to work with the Airflow connections manager and I can’t see how to pass the API Key and Secret through to the operators and sensors. Too hard, let’s move on. If you read this and know how to do it, drop a comment please.

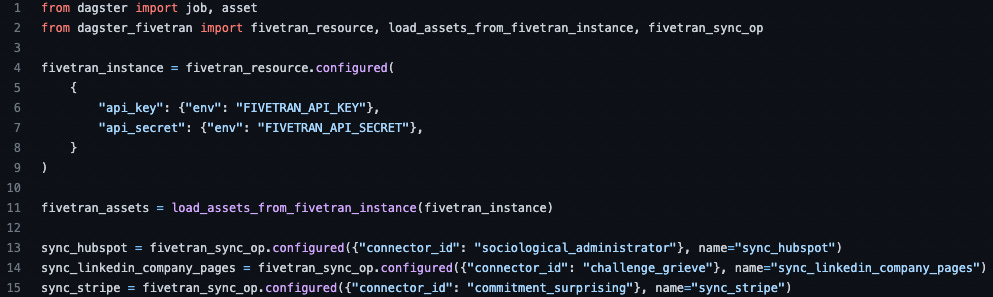

So let’s get the Fivetran syncs into a new Dagster DAG. I won’t go into the detail of the code I had to write to build the DAG - you can see it in the commits of the repo. Here are some screenshots of the core pieces:

There are some additional config/init things you need to set in the repo, but all of the interesting parts are above. As you can see below, just by setting the Fivetran ops as tasks the dbt Cloud job should start after, the dbt Cloud job inherits these as steps to be run beforehand. You schedule the entity you want to run - in this case the dbt Cloud job - and it runs what is needed beforehand, without you needing to consider it. I think this crudely displays Software Defined Assets in principle, but I’ll look to explore this in finer detail in the future.
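The "schedule the thing you want and let the upstream work follow" idea can be sketched in plain Python. This is a toy illustration using the standard library's `graphlib`, not Dagster's implementation and not the code from my repo; the node names and the `execution_plan` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each entry lists what must finish first.
UPSTREAM = {
    "dbt_cloud_job": {"fivetran_sync_a", "fivetran_sync_b", "fivetran_sync_c"},
    "fivetran_sync_a": set(),
    "fivetran_sync_b": set(),
    "fivetran_sync_c": set(),
}


def execution_plan(target, upstream):
    """Return the target plus everything upstream of it, in a valid run order."""
    needed = {}
    stack = [target]
    while stack:
        node = stack.pop()
        if node in needed:
            continue
        deps = set(upstream.get(node, ()))
        needed[node] = deps
        stack.extend(deps)
    # static_order() yields each node only after all of its predecessors.
    return list(TopologicalSorter(needed).static_order())


if __name__ == "__main__":
    # Asking only for the dbt Cloud job still pulls in the three syncs first.
    print(execution_plan("dbt_cloud_job", UPSTREAM))
```

The order of the three syncs relative to each other is not fixed, since nothing makes one depend on another; only the dbt Cloud job is guaranteed to come last.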

Dagster can also load everything from a single repository file (similar to how Airflow can work from one DAG file), which is convenient for prototyping and tiny projects. However, Dagster recommend a project structure for production deployments which splits entities (assets, jobs, resources, sensors…) across a file structure. I opted to use this as it seems cleaner, more scalable and more modular. I wanted to experience what developing in Dagster would feel like in a normal team setting.

You do need to learn a bit more about Python, currently, than you would with Airflow. I pretty much managed to write my Airflow DAG correctly the first time by copying the Fivetran example, but it won’t be that quick first time around with Dagster (although it may be for you if you pinch my code, feel free). However, having spent some time on Slack with Daniel to figure it out, I feel pretty comfortable extending it myself and asking on their community Slack if I get stuck. There is a CoRise course on it too, which probably would have made this easier.

The run took the same 13 mins it did on Airflow. With Dagster you pay per minute for each operation that is active while a job is running. In my case, there is a period of time where all three Fivetran syncs are running and therefore I’m paying for three operations concurrently. So my original daily estimate of 40 cents was off, but it still won’t break $1 a day, compared to about $12 on MWAA. I’ve destroyed my MWAA instance and look forward to never having to use it again.
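Here is a back-of-the-envelope sketch of why concurrency changes the bill. The per-op durations and the rate below are hypothetical numbers I've made up for illustration (the rate is picked only because it roughly matches 40 cents for a 13-minute run); they are not my real run timings or Dagster's published pricing.

```python
# Hypothetical minutes each op was active during one run.
OP_MINUTES = {
    "fivetran_sync_a": 5,
    "fivetran_sync_b": 4,
    "fivetran_sync_c": 3,
    "dbt_cloud_job": 8,
}

# Hypothetical price per op-minute, in dollars.
RATE_PER_OP_MINUTE = 0.03


def billable_op_minutes(op_minutes):
    """Every op is billed for its own active time, even when ops overlap."""
    return sum(op_minutes.values())


def run_cost(op_minutes, rate):
    """Cost of one run in dollars under per-op-minute billing."""
    return billable_op_minutes(op_minutes) * rate


if __name__ == "__main__":
    syncs = [m for name, m in OP_MINUTES.items() if name.startswith("fivetran")]
    # The syncs run side by side, so wall-clock time is the longest sync plus dbt.
    wall_clock = max(syncs) + OP_MINUTES["dbt_cloud_job"]
    print("wall-clock minutes:", wall_clock)
    print("billable op-minutes:", billable_op_minutes(OP_MINUTES))
    print("cost per run: $%.2f" % run_cost(OP_MINUTES, RATE_PER_OP_MINUTE))
```

With these made-up numbers the wall-clock time is shorter than the billed op-minutes, which is the gap between my original estimate and what I would actually pay.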

Another advantage Dagster has is not needing the sensors I had in my Airflow DAG - the Dagster ops have this built in, and you can see them polling the relevant API in the logs to confirm completion. This is a substantial bit of simplification if you consider how big some production DAGs get.
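The polling behaviour you see in the logs boils down to a loop like the one below. This is a generic sketch, not the code inside the Dagster ops: `wait_for_completion`, the status strings and the default intervals are all assumptions for illustration.

```python
import time


def wait_for_completion(get_status, poll_interval=10.0, timeout=600.0,
                        sleep=time.sleep, clock=time.monotonic):
    """Poll `get_status` until it reports success, failure or a timeout.

    `get_status` is any zero-argument callable returning a status string,
    e.g. a wrapper around a call to a sync or job status API.
    """
    deadline = clock() + timeout
    while True:
        status = get_status()
        if status == "success":
            return status
        if status == "failed":
            raise RuntimeError("remote job reported failure")
        if clock() >= deadline:
            raise TimeoutError("gave up waiting for the remote job")
        # Wait before asking again so the API isn't hammered.
        sleep(poll_interval)


if __name__ == "__main__":
    # Fake status source standing in for a real API call.
    statuses = iter(["running", "running", "success"])
    print(wait_for_completion(lambda: next(statuses), poll_interval=0.01))
```

In Airflow I had to model this waiting as a separate sensor task; having it inside the op means one node in the graph per piece of remote work.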

The log output from Dagster is excellent. It shows all of the tables being updated by each Fivetran sync (there can be a lot for some, like Hubspot). Once the dbt Cloud job has finished, the metadata is retrieved from the dbt metadata API, parsed and put into the logs model by model, which is also great for debugging! I’m impressed by this. Log entries are classified by type, and you can filter them by event type or severity of error.

Given Dagster has the ability to display error severity by dbt model, the alerting from Dagster about dbt jobs is likely to be superior to what you get from dbt Cloud, which only shows you the state of the whole job, so you have to click through into the app to see more detail. Even then, you have to scroll to the bottom of the logs to see the errors together; Dagster has the ability to show you each error separately. I imagine you’d also be able to understand which part of a multi-table sync failed with Fivetran or another ELT provider. This fine-grained alerting is invaluable to a data team.

Next time in this series - a deeper look at Software Defined Assets and a wrap up.