Open Semantic Interchange

Not too little, not too late

Over the last few years, I have really wanted an open standard for semantic layers: something that would make semantic layer definition code (probably YAML) portable between BI tools, standalone semantic layers and other data products. In a way, open-source Cube has been this in the past. It is a very well-used open-source project with known users in pretty much all big tech companies. However, it has never had enough support from other BI tools, which, along with the drive towards AI, led to Cube pursuing their own agentic BI tool in Cube Cloud.

Nearly Headless BI

The semantic layer came of age over the course of two weeks at the Moscone Center. Both Snowflake, with semantic views, and Databricks, with metric views, have essentially implemented a semantic layer with a compiler, which I predicted around this time last year that they would do before the end of 2025.

I have also been critical of newer technologies like Malloy, which have similar aims to semantic layers but seemed less practical. My thinking was that getting users to learn a new DSL when we already have SQL, YAML, MDX, DAX… was a bad idea and doomed to fail. However, with AI, perhaps there is value to be had. If Malloy is a tool used by AI, with the core definitions also maintained by AI, then no human needs to learn a new DSL.

SQL Forever

Recently, the Malloy team moved to Meta, from Google. Malloy provides a language to express analytical and transformation queries that compiles to SQL.

Out comes a new standard, spearheaded by Snowflake, Salesforce (Tableau) and others - the Open Semantic Interchange. Surely, this is great news. I should be really pleased, right? Michael Rogers sent me the article announcing it and asked me if I thought it was a big deal before I had seen it on LinkedIn[1]. The thing is, I’m not that bothered, and I had to think for a moment as to why. I’m sure it would have felt like bigger news had I still been at Cube, but even so… Then it dawned on me - this level of standardisation is much more valuable when human beings have to learn the DSL. It makes them much less worried that they are learning or deploying something that ends up being niche or unadopted.

I don’t really believe that semantic layer code will be managed by humans in the near term. Now that I have great tools like Claude Code and Codex CLI, I don’t really find myself writing semantic layer YAML any more. Even when they make mistakes, I feed the semantic layer compiler errors back in and let them fix the problem.

I recently had to convert some dbt MetricFlow YAML to Hex’s new semantic layer YAML standard. Most semantic layer YAML has ended up very similar to LookML, including Cube, Hex, Count, Snowflake, ThoughtSpot and Databricks. In fact, MetricFlow is one of the more unusual syntaxes out there. So I typed Claude into Ghostty… I showed it the Hex and MetricFlow specs, I told it where the files to convert were, and off it went. The first two times, compilation in Hex produced errors, but I fed these back into Claude Code and, on the third attempt, it generated correct Hex semantic YAML. I then asked Claude Code to make an agent markdown file from what it had learned, so that I could make an agent to do this for me should I need to do it again.
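The translate, compile, feed-errors-back loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: `translate` stands in for whatever coding agent you use and `compile_check` for the target tool's compiler, and the key names (`measure`, `agg`, `name`, `type`) are made up for the toy example rather than taken from the real Hex or MetricFlow specs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Conversion:
    output: str        # last candidate produced
    attempts: int      # number of translate calls made
    errors: List[str]  # errors from the final compile check (empty on success)


def convert_with_feedback(
    source: str,
    translate: Callable[[str, List[str]], str],
    compile_check: Callable[[str], List[str]],
    max_attempts: int = 3,
) -> Conversion:
    """Translate, compile, and pass accumulated compiler errors to the next attempt."""
    feedback: List[str] = []
    candidate = ""
    errors: List[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = translate(source, feedback)
        errors = compile_check(candidate)
        if not errors:
            return Conversion(candidate, attempt, [])
        feedback.extend(errors)
    return Conversion(candidate, max_attempts, errors)


# Toy stand-ins (hypothetical): the "target format" renames two keys.
RENAMES = {"measure": "name", "agg": "type"}


def toy_translate(source: str, feedback: List[str]) -> str:
    """Only applies a rename once an error message has mentioned the old key."""
    out = source
    for old, new in RENAMES.items():
        if any(f"'{old}'" in msg for msg in feedback):
            out = out.replace(f"{old}:", f"{new}:")
    return out


def toy_compile_check(candidate: str) -> List[str]:
    """Reports only the first problem it finds, as many compilers do."""
    for old, new in RENAMES.items():
        if f"{old}:" in candidate:
            return [f"unknown key '{old}', expected '{new}'"]
    return []


result = convert_with_feedback(
    "measure: revenue\nagg: sum", toy_translate, toy_compile_check
)
print(result.attempts, result.errors)
print(result.output)
```

With these toy stand-ins the first two candidates fail to compile and the third one passes, which mirrors the experience described above. In practice the two callables would wrap an agent session and the real compiler, neither of which is modelled here.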

My point is that the cost of translation between different standards, especially when they are so similar, is next to nil. That doesn’t mean there is no value in an open standard that is well-adopted. It’s just that the value is diminished. This is partly why I’m not that excited about it. The driving force behind this new open standard is a desire for increased adoption of semantic layers, driven by the clear demand for AI access to data. However, this very driving force is also why the open standard isn’t as valuable as it could have been in the past.

Enterprise demand for AI capabilities drives the urgency behind OSI. Snowflake reported that nearly half of new customers in Q2 fiscal 2026 chose the platform for AI capabilities, with over 6,100 customers using its AI offerings weekly. - link

For me, the peak moment when an open semantic standard could have been most valuable was soon after Looker got acquired by GCP. Looker was gaining market share fast before the acquisition, and afterwards users were concerned with lock-in. Companies like Lightdash have succeeded, in part, by addressing these concerns. In hindsight, an open-source BI tool that took a LookML repo as-is and functioned fully with its definitions could have been a great idea.

There are also notable absences from the list of launch partners: Databricks, AtScale and Microsoft. Microsoft, through MDX and DAX, easily has over half of all semantic layer usage. They will never sign up to such a standard - it’s not in their interest. I think this is true of GCP too. That doesn’t mean an open standard can’t work without Microsoft, but I would expect both Databricks and Snowflake to need to adopt it for it to be meaningful, as they are the two big independent forces in data away from GCP and Microsoft.

I also know that both Snowflake and Databricks expect semantic layer definitions to one day exist as extensions to their warehouse SQL. They may well support a YAML format too, but it shows they are hedging their bets and would rather lock in customers to something specific to their warehouse for retention and performance.

The standard could still be useful if it makes it easy to move definitions between commonly used tools like Hex and dbt, and, indeed, tools commonly used together.

I also doubt that the same YAML in the open standard will be compiled to exactly the same executable code between any given permutation of compiler[2] and underlying warehouse. It will probably be the same most of the time and produce the same results most of the time, but not all of the time. I think this inconsistency will feel similar to how adoption of ANSI-SQL varies from database to database and how the same SQL on the same data on different platforms can output slightly different results.
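To make that concern concrete, here is a small sketch in which one metric definition is compiled by two different compilers. Everything in it is an assumption for illustration: the metric dictionary is not the OSI format, and `compile_plain` and `compile_casting` are hypothetical compilers, not any vendor's. Both queries run against an in-memory SQLite database simply because it ships with Python.

```python
import sqlite3

# A made-up ratio metric definition (not the OSI schema).
METRIC = {
    "name": "conversion_rate",
    "numerator": "orders",
    "denominator": "visits",
    "table": "daily",
}


def compile_plain(metric: dict) -> str:
    """Hypothetical compiler A: emits the ratio exactly as defined."""
    return (
        f"SELECT SUM({metric['numerator']}) / SUM({metric['denominator']}) "
        f"AS {metric['name']} FROM {metric['table']}"
    )


def compile_casting(metric: dict) -> str:
    """Hypothetical compiler B: casts to REAL and guards against a zero denominator."""
    return (
        f"SELECT CAST(SUM({metric['numerator']}) AS REAL) "
        f"/ NULLIF(SUM({metric['denominator']}), 0) "
        f"AS {metric['name']} FROM {metric['table']}"
    )


def run(sql: str):
    """Run a query against a small fixed dataset and return the single value."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE daily (orders INTEGER, visits INTEGER)")
    conn.executemany("INSERT INTO daily VALUES (?, ?)", [(1, 4), (2, 4)])
    value = conn.execute(sql).fetchone()[0]
    conn.close()
    return value


print(run(compile_plain(METRIC)))    # 0: SQLite divides integers as integers
print(run(compile_casting(METRIC)))  # 0.375
```

The definition and the data are identical, yet the two outputs differ because the generated SQL differs. The same kind of gap can also come from the warehouse side, since the behaviour of integer division is one of the places where SQL engines disagree.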

Participant-specific model mapping and read/write code modules: These modules will convert OSI models to participant-specific models/languages and will be part of the Apache open-source project.[3] - link

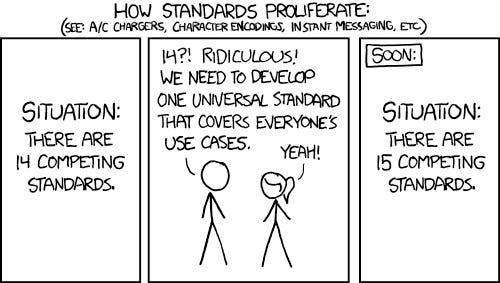

I am hopeful the creation of the standard and adoption of it by vendors will improve things, but to me there is a clear XKCD 927 risk.

[1] Oh well, I’ll see it in a couple of weeks.

[2] After all, an open semantic interchange standard doesn’t necessarily mean there will be a shared compiler.

[3] There will inevitably be different levels of support from different vendors. Some of this will be because of genuine compatibility reasons, some will be lack of commitment and some will be because others are not participating in good faith and just see this as a rubber stamp or lead source.

https://imgflip.com/i/aa4nxo

Translation is easier than ever, and if you are in a more modern company where the barriers to adapting the tech stack are low (even for things like deploying Claude Code at scale), I agree the value is minimal because you can build something that does this. But in large established enterprises that are still transforming and often have multiple BI tools, a standard that improves interoperability removes one friction point. Right now so many tools don’t even expose their semantic layer well in any format - sometimes you can only build it through clicks in a UI. That makes semantic layers hard to adopt: you can’t build one entirely in a single tool, and it’s tricky to make the tools talk to each other and to the warehouse. So moving towards everything being code-based reduces friction. And the closer the formats are, the better.