I met Rohan Thakur through the Lightdash community Slack a few months ago, and have since had a chance to meet up in person and stay in touch over Slack and email. Rohan was part of the original data team at WeWork, and is now leading the analytics and analytics engineering teams at Collectors.

Rohan recently deployed Lightdash at Collectors, but then began looking for a more powerful, multi-purpose semantic layer to use with Lightdash and for many other purposes. I’ve helped Rohan a bit with some guidance on the choices out there, but he did a fantastic bit of research comparing the dbt Semantic Layer and Cube. I’ve had my thoughts so deep into semantic layers for a while that I may be biased one way or another, so it’s great to have another perspective. Here is a link to Rohan’s original doc, but I’ve excerpted most of it below.

What I really value about Rohan’s perspective is that he is savvy and experienced enough to stay on target, and to choose what is best for his org and use cases. He has no reason to be particularly biased in any direction or towards any vendor. Like me, Rohan is probably quite fond of dbt and the impact it has had on our careers. The only real bias here would be that if dbt had a good option that integrated well with current transformation processes, then it would be an easy choice. It’s the MacBook sticker we already have, the tool we’re already using and the community we love and have been part of for years.

Excerpts from Rohan’s document:

Context

…the landscape of “metrics” in the data stack has changed significantly. There have been a few changes that are specifically relevant:

The deprecation of dbt’s metrics package: dbt deprecated their dbt_metrics package which we had deployed as our metrics layer. Therefore, we are forced to change our strategy here as it is not supported by newer dbt versions moving forward. By remaining on an older version of dbt, we miss out on key features included in their new releases.

The advent of dedicated semantic layer products: dbt Labs has since gone on to acquire Transform and introduce a Semantic Layer product built on Transform’s MetricFlow. This is meant to be their replacement for the dbt_metrics package, although it’s not quite a one-to-one replacement (more on that below). In addition, players like Cube (a dedicated semantic layer product) have gone on to gain adoption, and we have seen interesting examples of players in the data space that integrate with, or are built on top of, semantic layers (e.g. Push.ai, Delphi).

Growing Use-Cases beyond BI: As Collectors matures into a data-driven organization on a unified data platform, the use-cases from data analytics have started to grow beyond BI. Whether it is dedicated applications built on our data, LLM functionality built on our datasets, or having other external non-BI consumers be able to query our data in a consistent manner (e.g. notebooks), we are moving towards a future that tends towards more data products beyond reporting and visualization, though BI will always be a foundational and critical use-case for us.

Given the evolution of the space and our growing data maturity, in this document we evaluate whether a dedicated semantic layer product would benefit our company…

…Architecture

As we can see in Figure 1 and Figure 2, semantic layers sit between dbt transformations and the final interface of data access - whatever this may be. The two semantic layers we will evaluate below are dbt (MetricFlow) and Cube.

Without going into too much detail about each vendor’s specific implementation (we link the documentation in the References section below), suffice it to say, for now, that a semantic layer is generally defined as a set of YAML files that represent your key metrics, entities and the relationships between them. While dbt uses terms such as ‘entities’, ‘measures’ and ‘metrics’ within semantic models (one-to-one with dbt models), Cube utilizes ‘cubes’ (one-to-one with dbt models) and ‘views’ (join relations specified). Both implementations are quite similar conceptually and ultimately allow you to define files for your key entities (e.g. orders), the aggregations that are important to you (e.g. count_orders), the metrics that need to be centralized (e.g. monthly shipped orders), and the relationships with other entities (e.g. join the customers cube/semantic model on customer_id).
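To make the idea concrete, here is a minimal Python sketch of what a semantic layer does with such definitions: it holds entities, measures and join relationships, and compiles a metric request into SQL. This is not dbt's or Cube's actual format or API (both use YAML and their own compilers); the class names, the `compile_query` helper and the `analytics.fct_orders` / `analytics.dim_customers` tables are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Measure:
    name: str   # e.g. "count_orders"
    agg: str    # SQL aggregate function, e.g. "COUNT" or "SUM"
    expr: str   # column being aggregated


@dataclass
class Join:
    target: str  # name of the other semantic model
    on: str      # shared key column, e.g. "customer_id"


@dataclass
class SemanticModel:
    name: str    # entity name, e.g. "orders"
    table: str   # underlying dbt model / warehouse table (hypothetical)
    dimensions: list = field(default_factory=list)
    measures: list = field(default_factory=list)
    joins: list = field(default_factory=list)


def compile_query(models, model_name, measure_name, group_by):
    """Compile one measure, grouped by qualified dimensions, into SQL text."""
    base = models[model_name]
    measure = next(m for m in base.measures if m.name == measure_name)
    # Other models referenced by the requested dimensions, e.g. "customers".
    needed = {d.split(".")[0] for d in group_by} - {model_name}
    select = group_by + [f"{measure.agg}({base.name}.{measure.expr}) AS {measure.name}"]
    lines = ["SELECT " + ", ".join(select), f"FROM {base.table} AS {base.name}"]
    for join in base.joins:
        if join.target in needed:
            other = models[join.target]
            lines.append(
                f"JOIN {other.table} AS {other.name} "
                f"ON {base.name}.{join.on} = {other.name}.{join.on}"
            )
    if group_by:
        lines.append("GROUP BY " + ", ".join(group_by))
    return "\n".join(lines)


# Hypothetical definitions mirroring the examples in the text.
models = {
    "orders": SemanticModel(
        name="orders",
        table="analytics.fct_orders",
        dimensions=["status"],
        measures=[Measure("count_orders", "COUNT", "order_id")],
        joins=[Join("customers", "customer_id")],
    ),
    "customers": SemanticModel(
        name="customers",
        table="analytics.dim_customers",
        dimensions=["region"],
    ),
}

sql = compile_query(models, "orders", "count_orders", ["customers.region"])
print(sql)
```

The point of the sketch is that consumers ask for `count_orders` by `customers.region` and never write the join or the aggregation themselves; the definition lives in one place and every interface gets the same SQL.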

These semantic objects can then be accessed through the vendor’s integrations. Both dbt and Cube provide API access, as well as a list of vendors that already integrate with them in order to access your data.
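As a rough illustration of what API access looks like from a non-BI consumer such as a notebook, the sketch below builds (but does not send) a request against Cube's REST load endpoint. The endpoint path and the `measures` / `dimensions` / `timeDimensions` query shape follow my understanding of Cube's documented REST API and should be checked against the current docs; the member names (`orders.count`, `customers.region`, `orders.created_at`), the base URL and the token are placeholders, and `build_load_request` is a hypothetical helper.

```python
import json
import urllib.parse
import urllib.request


def build_load_request(base_url, token, query):
    """Build, without sending, a GET request to Cube's REST load endpoint."""
    params = urllib.parse.urlencode({"query": json.dumps(query)})
    url = base_url.rstrip("/") + "/cubejs-api/v1/load?" + params
    return urllib.request.Request(url, headers={"Authorization": token})


# Hypothetical member names; real ones come from your own cubes and views.
query = {
    "measures": ["orders.count"],
    "dimensions": ["customers.region"],
    "timeDimensions": [
        {"dimension": "orders.created_at", "granularity": "month"}
    ],
}

request = build_load_request("http://localhost:4000", "<api-token>", query)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` against a running deployment would return the aggregated rows as JSON; the consumer names metrics and dimensions only, and never sees the underlying SQL.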

Comparison

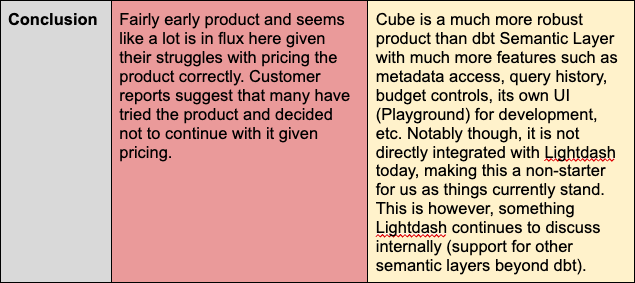

Below, we perform a brief comparison of features between dbt and Cube semantic layers.

dbt Labs is aiming very clearly at enterprise now, as is evident from Rohan’s findings, and has been communicated by dbt Labs. They believe that winning large enterprise deals is the best way they can progress towards profitability and exit. This may make buying dbt Cloud to use the dbt Semantic Layer impractical for many. SMB and startup data teams may feel betrayed by this, and the lack of open source.

What I would say is that dbt Labs didn’t need permission from anyone to do this and need no forgiveness either. I’m grateful for how they have transformed data work for so many. My career wouldn’t be what it is without the impact of dbt Labs on the industry.

Their switch of focus to enterprise may be at the cost of leaving some smaller customers behind, or facing the ire of those who think the whole party should be free. I say Godspeed to dbt Labs, go get it! I look forward to seeing dbt Labs having their own moment here:

One impact of dbt Labs’ focusing on large enterprise is that the buying decision is then simple. I’m excluding semantic layers bundled with BI tools, whether this is proprietary in nature or open source with only the parent BI tool able to use it… the effect here is still lock-in either way. You can see this with Rohan’s use of Lightdash above - even though it’s OSS… only Lightdash supports it, so you end up only being able to use Lightdash with it even though they haven’t forced this outcome.

For large enterprises who are dbt Cloud customers or want/need liability shift [1] for transformation and SL in one place, use the dbt SL.

For large enterprises who aren’t dbt Cloud customers and want full MDX functionality in their SL, use AtScale.

For large enterprises who want to use the same SL to serve their external data products and internal analytical needs, use Cube. Any other large enterprises not captured by the first two groups also belong here.

For smaller companies who want to use dbt SL no matter what… the choice is clear.

For all other companies, Cube is probably the best choice. In Rohan’s experience (and ours), it’s technically superior. It’s OSS all the way down to compiling metrics to SQL. It has a great cloud offering (available on-prem too) that you can count on not being unreasonably priced, because you always have the option of running the open-source version yourself. In practice, the TCO of running Cube yourself will usually be higher than that of Cube Cloud. It comes bundled with DuckDB if you don’t have a data warehouse. It supports the widest range of data sources. It has a Postgres-compatible SQL API, as well as the best REST and GraphQL APIs for a semantic layer that we have seen.

A few months ago at Delphi, we decided we needed to bundle a semantic layer and we chose Cube, mostly for the reasons above, but we have now also proven that it is the best available to use with AI.

Honourable Mentions

I haven’t had a chance to play with GoodData, but it looks interesting and with a Super Data Bro as VP of Product Strategy, they should be heading in a good direction. From what I can see, it offers a standalone SaaS semantic layer, except that it doesn’t connect to third-party BI tools and instead offers one itself. This makes it more like a semantic layer bundled with a BI tool, so I wouldn’t choose to recommend it. It seems like Looker with better headless functionality, and a focus on the semantic layer over the BI part. I could be wrong about some of this detail, so take a look for yourself or take a ride on Yoshi. They do seem to have open-sourced some front-end and viz components, but it doesn’t seem like the core of the semantic layer, including the compiler, is open-source.

GCP also offers headless LookML now, but it’s locked in to GCP and technically inferior to other offerings, and innovating at a slower pace to boot. If you’re all in on GCP, it’s one to consider, but not choose by default.

Another choice for large enterprises is Stratio. It has won some large European banks as flagship customers and I would expect a minimum contract to be something like EUR 500k. Definitely worth a look if you work for a large EU enterprise, especially if you have a heavy compliance burden for a new vendor.

There are also a few new startups out there trying different or niche approaches, but none are as advanced or mature as the choices above, to be worthy of recommendation.

Lack of competition below large enterprise is actually a problem in the semantic layer space, with Transform being acquired by dbt Labs and taken up-market.

Enterprise-focused tools are most likely not appropriate choices for SMB and mid-market companies: you can end up paying much larger amounts, as enterprise sales approaches require higher deal sizes. Future features will also be geared towards large enterprise and not SMB/mid-market needs. A choice of semantic layer is a long-term one that will likely last beyond a single data team leader’s tenure, and it needs to be made with that long-term view in mind. Enterprise contracts will also increase in price significantly at renewal, as this is the easiest way for enterprise-focused SaaS companies to grow their revenue. I have heard of data team leads who have made choices like this recently, and had their fingers burnt on renewal.

The one major problem we have observed with standalone semantic layers is the lack of native support from BI vendors (this is why AtScale has to look like MDX to a BI tool). Until now…

From Hamzah Chaudhary - Co-Founder and CEO at Lightdash:

Lightdash does have an open source semantic layer built-in that a lot of customers use, and for a lot of data teams, this fits the bill and we want to keep improving that over time.

Our mission has always been to be the most flexible BI platform, and we’ve shown that by our current integration with dbt Semantic Layer!

We’d love to do the same with technologies like Cube too, while we don’t have an integration today - we know more community members may be interested in trying it so we’ll build support there too.


[1] One problem with using open-source software for enterprises is liability. If they run the open-source software themselves, or via a third-party tool that does not bear liability for it (e.g. running it with Dagster), and something goes wrong as a result of the open-source software, then the enterprise bears all liability, which their legal teams usually aren’t happy with. One strength dbt Labs has with its dbt Cloud offering is that, because they can reasonably be liable for any defects in dbt-core and are the only company that can be, they can be liable for dbt transformations and the semantic layer in one vendor offering.

The most important features of an enterprise product are legal and infosec compliance.

Great article! The challenge with both options is the need to manually document the semantic layer. AI can help... a little... but you need a dedicated solution for it. I actually just shared some content about it today: https://journey.getsolid.ai/p/semantic-layer-for-ai-lets-not-make?r=5b9smj&utm_campaign=post&utm_medium=web&showWelcomeOnShare=false

Thanks for linking the article from Rohan, this is stellar!

Sadly it looks like not much has happened since 2022 when I wrote this Metric Layer overview, except of course the acquisition of Transform/MetricFlow.

Linking the original article in case anyone is interested:

https://medium.com/@vfisa/an-overview-of-metric-layer-offerings-a9ddcffb446e

It might be interesting to get Rohan's opinion on other metric layer technologies mentioned.