Standard Semantics

Echoing the call for an open standard for the semantic layer

I don’t know exactly why this is, but I keep finding new companies and founders who are building semantic layers. Perhaps this is because Delphi integrates with semantic layers and they are finding me. Perhaps it’s because dbt Labs are driving the conversation forward and validating the segment. Perhaps it’s a sign of the times and the maturity of the data world - we’re coming full-circle back to data modelling and the natural way to expose this, with current tech, is the semantic layer.

The problem, though, is that if we end up with many semantic layers in the wild that all look and interface differently… many of them will simply fail. If we had a single standard for how their interfaces should work, then the strengths and the features of each individual semantic layer wouldn’t be undermined by an interface that nothing supports.

I wrote these first paragraphs above before heading to dbt Coalesce, and it turns out others are having the same thoughts:

Artyom’s talk, above, is also available as a blog post.

Michael and I spoke about this topic while we were together during Coalesce, and we realised that we are uniquely qualified in being familiar with so many semantic layers. Only us and the likes of GigaOm/Gartner would know the segment as a whole so well, and really only we would know how this many semantic layers worked at a technical level:

How are entities, metrics and dimensions defined?

How do the APIs for the semantic layers work?

What features are available in each of the semantic layers?

There are many consultancies out there who deploy semantic layers, but they usually specialise in one or, at most, two of them - they want to engage at an expert level and are often tied to a cloud/vendor relationship.

We have also felt the problems associated with lack of standardisation more acutely than anyone else we can think of.

Why not on top of SQL?

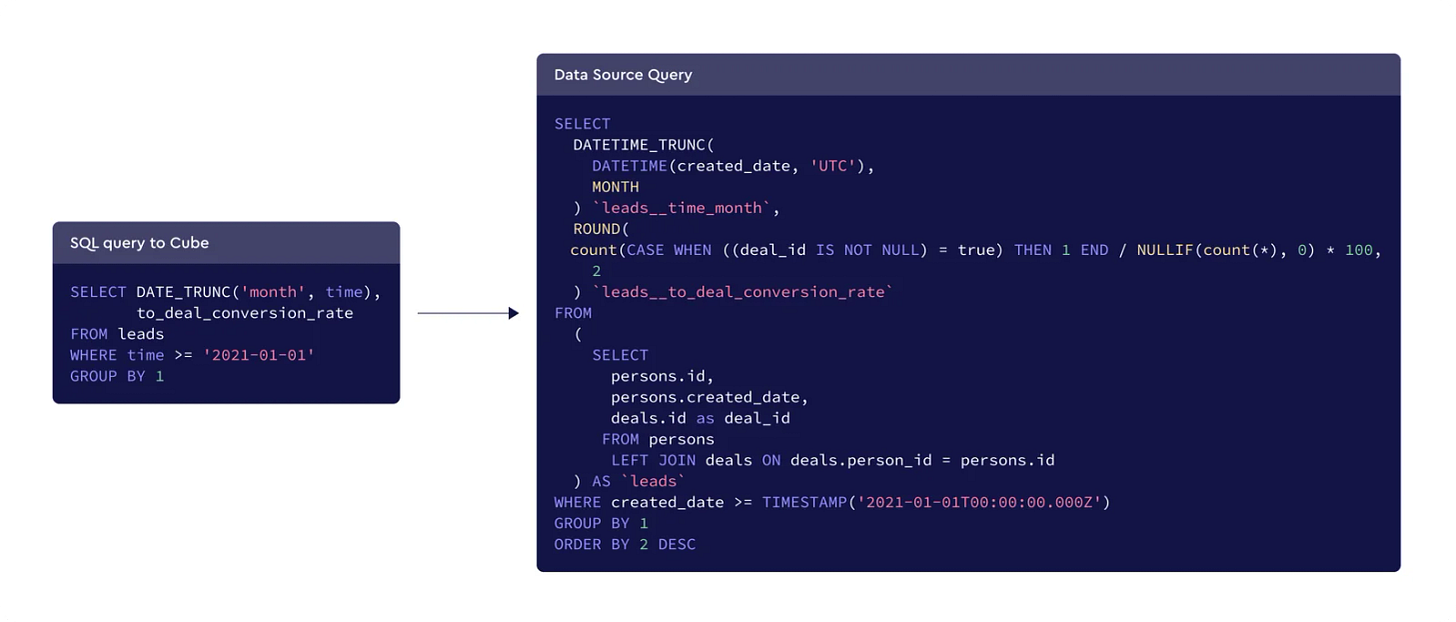

One of those most common APIs that semantic layers offer are SQL APIs. The reason being that all BI tools, and pretty much any other consumer, will be able to support SQL. The semantic layer simply appears as a highly-supported database like Postgres.

The problem with this is that the SQL isn’t really normal SQL. It’s something that looks as though it could be valid SQL and can be passed through successfully to the semantic layer, which will then compile it to the real query.

You then end up with queries like the one above, where you are referring to tables that don’t exist: they are actually objects in the semantic layer.

SELECT status, count FROM orders

SELECT status, SUM(count) FROM orders GROUP BY 1

SELECT status, MEASURE(count) FROM orders GROUP BY 1

https://cube.dev/docs/product/apis-integrations/sql-api#aggregated-vs-non-aggregated-queries

Or, you end up with queries like the ones above (which are all equivalent) where you are either:

Referring to a measure as a normal field in the SELECT, which is not aggregated. This is strange because the SQL doesn’t look like an aggregation query but what will happen after it is compiled by the semantic layer is an aggregation query.

Referring to a measure by wrapping it in a standard SQL aggregation function and doing the group by. This is also strange, because the SQL doesn’t reflect what is happening afterwards. You could have had any aggregation function there and it wouldn’t really matter, you are just denoting that the field in the aggregation function is a measure for the semantic layer compiler.

Referring to a measure using a special measure syntax and then having a group by, too. Standard SQL does not have the concept of measures or metrics, so the SQL generated is unlike any real SQL.

MDX also tries to solve this in a similar way:

SELECT {[Measures].[Unit Sales], [Measures].[Store Sales]} ON COLUMNS, {[Products].children} ON ROWS

FROM [Sales]

WHERE ([Time].[2010].[Q1], [Customers].[USA].[CA])

https://www.atscale.com/blog/reducing-query-complexity-with-mdx-and-atscale/

Regarding point 3 above, Julian Hyde - Staff Engineer at Google and original developer of Apache Calcite, makes really good arguments as to why SQL should be extended to support measures, in his excellent Data Council talk, below. His arguments for why this should be all resound with the goals I have for the semantic layer.

In all honesty, I don’t know why the likes of Snowflake, GCP(BigQuery) and Databricks haven’t offered a new object in their SQL dialects which lets you define a measure. It would work in pretty much all the BI tools, as they all integrate directly with those data warehouses. Perhaps the lack of a standard format for this in SQL is the problem - all three have already had to diverge from ANSI SQL in order to offer all the features of their platforms. Perhaps Calcite could be the format they all adopt together, and I would be very happy for that to happen!

I also think that entities need to be able to be defined in a semantic layer, so simply extending SQL to handle metrics isn’t enough.

What do we want to enable?

Whilst all of these SQL-based methods above have value, they really only offer a way for someone who knows how to write SQL, and understands the semantic layer, to write simpler, more consistent queries. It doesn’t help non-technical users access the semantic layer. Non-technical users have knowledge of entities and their associated attributes and metrics. We need to enable them to request these without being concerned about the underlying query engine or data structure, and without needing to know how to write SQL or another machine language.

They want to ask a question like: “What was my revenue by product last week for Oregon”.

I know that a good analyst will say:

What do you mean by revenue (gross, net, EBITDA…)? An expectation for a semantic layer standard should include hierarchies, where there is a parent metric, like “Gross Revenue”, which is the most basic and most commonly-requested variant of a metric. Then, there can be children, which are specialised variants of the parent metric, like “Net Revenue” or “EBITDA” or “Revenue excluding this non-standard product line”. Thus, the semantic layer provides clarity for what someone means by the metric - if they just use a generic term like “Revenue”, they will get the parent metric “Gross Revenue”, unless they say otherwise. This can become a communicated standard way of operating - a set of understood assumptions to be used in an organisation.

What do you mean by product (products of the order, our business line…)? As a semantic layer defines entities, the metric requested is associated with an entity. For example, “Revenue” could be associated with an “Order” entity. It, again, could be the standard way of operating: to take a dimension associated with the same entity as the metric. Therefore, the products of the order would be chosen by default unless specified otherwise. A semantic layer standard should allow you to understand the distance between entities in the data model, similar to distance between nodes on a graph, without needing to compile the semantic layer - therefore available from an endpoint. As Revenue and Products are on the same entity, Order, the distance between them would be 0 or at most 1, if Product was on the Order Line entity instead.

What do you mean by “last week” (business week, calendar week, including holidays, Sunday to Saturday, Monday to Sunday, just working days…). Many semantic layers today allow you to set standard business operating periods, such as week and year - it can be accepted that the period requested is the accepted standard for the business, unless specifically stated otherwise.

When you say “Oregon” do you mean (customers from Oregon, retailers from Oregon, inventory from Oregon). This is where the graph distance method above doesn’t work - it’s likely that that the customer, retailer and product entities are all 1 node away from the order. It’s also likely that the order would have a State attribute (often taken from the shipping address, which would belong to the customer at the time of the order. This could be different to the state of the customer before or after the order), the order could also have another state for the retailer. This is where it’s genuinely impossible to know the answer, and you need to clarify or allow the user to correct afterwards, by explicitly telling them which field you are pulling.

LLMs/AI will be part of the workflow that enables access to data in the future - to help non-technical users answer questions like those above. You might say that this isn’t certain, but for me it’s simply a question of time. It’s not something that will happen after you retire, it’s just around the corner.

The semantic layer interface standard that best suits LLMs is at a huge advantage.

LLMs and Generative AI systems are going to need a standard interface to access our organizational data. - Jason Ganz on AE roundup

Objects not strings

If you come at this from the angle of what we want to enable as described above, these kinds of questions and more complex ones over time - we want the format of the request at the semantic layer interface to be very restricted and as close to the natural language request as possible. Inserting objects into strings that somewhat look like SQL doesn’t work well. LLMs have been trained on plenty of SQL, but this is standard SQL based on a normal db schema, without abstraction over the schema or strange syntax.

SQL is also pretty freeform: you can end up with the same results in many different ways. This is why getting an LLM to write consistent SQL is not going to happen very difficult. If there was only one way to correctly make the request, this would massively increase the likelihood of the LLM translating the natural language query into this request successfully. The only part of a semantic layer request that should be a freeform string is the value of a filter, where the dimension being filtered is of string type. Everything else should be an object or part of the standard structure of the request.

I asked Michael to talk about the benefits of supporting a REST HTTP interface (which can be GraphQL):

Semantic layers should allow requests to be sent as a REST HTTP request. The request should take in a structured representation of the query, like that shown above, and return data (or an error) in a consistent format.

You might ask “why not SQL”? Many semantic layers support querying them via SQL, and we don’t see this going away. However, supporting a REST interface lets people easily build data applications on top of semantic layers. We see data applications as a growing use case for semantic layers over the next several years.

This can be both internal data applications, as well as external (customer-facing) ones. For example, Cube is often used right now for embedded analytics. Software engineering teams that want to build customer-facing dashboards into their products (for example, a ReactJS application) often use Cube as their semantic layer. These applications are written by product engineers, using languages like JavaScript (or TypeScript), who are used to sending HTTP requests, not wrangling database drivers. They usually prefer to represent queries as structured objects, rather than plain text (SQL), leading to the adoption of tools like ORMs.

By supporting REST as a standard query interface, semantic layers can reach a broader variety of use-cases than just powering dashboards and BI tools. Teams are increasingly moving towards real-time, operational workflows on top of data, and semantic layers will play a key role in that.

Within REST APIs, there are two main options: (1) a “traditional” API where the query is represented as JSON, or (2) GraphQL. GraphQL has some advantages over a traditional REST API, and I won’t rehash them here. However, not all developers are familiar with GraphQL, and some actively dislike using it. It typically requires dependencies that aren’t needed for JSON-based REST requests and it can make applications more complex. A traditional REST API is more universal and may lead to better adoption of semantic layers.]

Modelling Language

Most semantic layers allow you to define your data model, entities, metrics and dimensions in a modelling language. This is typically YAML, and LookML is an example many are familiar with. Cube offers a YAML modelling language which is very similar to LookML - so similar that they have also offered a tool to convert LookML.

I’m not against having a visual way to define a data model, it certainly helps with accessibility and can probably help prevent some errors during development due to the broader view offered. However, I think the standard should require that the semantic layer is able to be defined in code. While YAML has its haters, it seems like it has become the default for these modelling languages, and it is accessible for people who aren’t able to use a programming language. So, it seems like being able to define a semantic layer in YAML is part of the standard.

One interesting example is Propel, who don’t have a modelling language, but every action and definition is possible via their GraphQL API, which is a feature other semantic layers don’t necessarily have. It also means that, while Propel don’t offer a modelling language, they can offer a Terraform provider to define a Propel semantic layer… which is once again YAML. Being able to mutate the semantic layer via REST/GraphQL is something that could be a good part of the standard, but perhaps on a second iteration.

dbt Labs and AtScale also offer YAML modelling language, but they are distinct from the others, due to how differently their semantic layers work.

Why is it important that we have some standardisation in modelling language?

If we have vastly different modelling languages, it makes it very hard for people to compare semantic layers. It also makes it very hard for data folks to migrate, should they need to. This may seem like a feature and not a bug for a semantic layer, but it could actually just reduce overall adoption, as people are cautious about committing to something where there is no possible exit.

If the languages a similar and easy to move between, then the choice shifts from “ to have a semantic layer or not” to “which semantic layer suits my needs more”. This is ‘raising the ceiling’ for the semantic layer. Whilst at dbt Coalesce, I had great conversations about the semantic layers available and what their pros and cons are. Whilst I was at Snowflake Summit, I mostly had to explain what a semantic layer was and why they should be deployed…

Another great reason for standardisation in modelling languages is that it becomes easy to standardise their use. We’ve seen some great things in the transformation space since dbt emerged as the standard transformation framework - there are now packages to automatically transform data, if they come from a common industry data source like Salesforce or Stripe.

The equivalent of this for the semantic layer is standardisation of how to model data and define metrics, dimensions and entities. SOMA is an example of this kind of standardisation, which could be pushed down into each semantic layer with packages. However, this becomes difficult or impossible with vastly different semantic layer modelling languages.

If data folks could choose a semantic layer that meets their needs, without worry of future migration cost, which provided them a standard data model, entities, metrics and dimensions out of the box, which, because of standardisation of interface, integrated with their choice of consumption tools…. Using a semantic layer could become the norm for data teams, rather than the exception.