Tailor Made

Things are moving fast

A couple of weeks ago, I wrote about OpenAI's acquisition of Windsurf and how it could significantly accelerate the automation of coding.

I predicted that Windsurf, powered by custom-trained OpenAI models tuned to its workflows, would gain a significant advantage over Cursor, which won't have that kind of backing from a frontier model producer.

I also said I was excited about the general release of nao, an IDE similar in concept to Windsurf but focused on the needs of data folks. I described Windsurf, Cursor and nao as VS Code forks, which is true. But blef highlighted a point I hadn't emphasised enough: Windsurf and Cursor build their own models to fit their workflows, and this is where the real value of these companies lies. It is partly why they are such attractive acquisition targets for the big AI companies. They are not only fellow model builders, but they also hold a niche, coding-focused dataset that only the users of their IDEs produce.

nao Labs will also have to follow this path to build a company of comparable value to Windsurf or Cursor. It looks like they've taken the very first step.

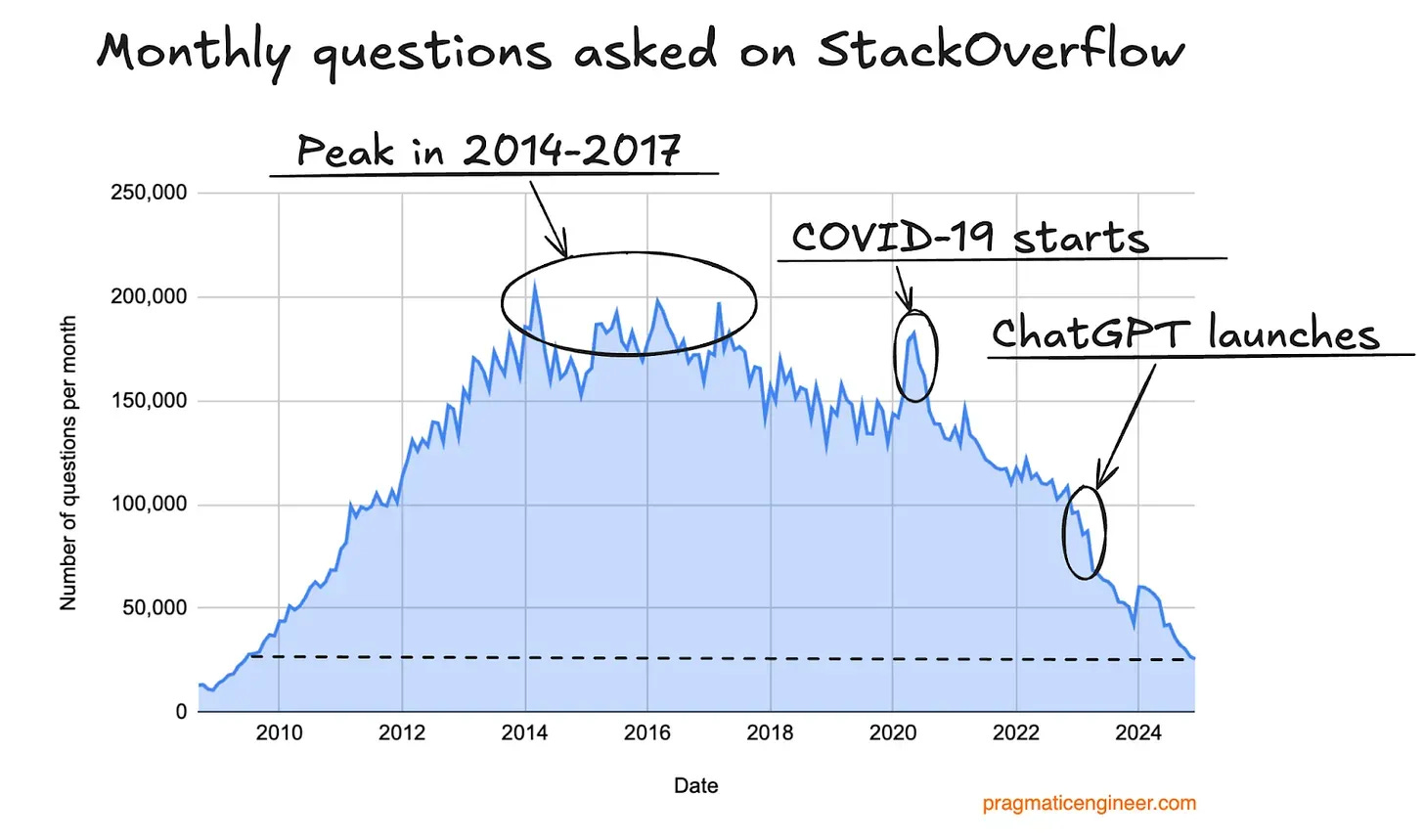

Related to this topic was a really interesting post by Gergely Orosz on the decline of Stack Overflow:

Back in the day, when I wanted to figure out how to do something in SQL, Python, Go etc., I would Google it and most likely find a few Stack Overflow posts similar to my question. I would then always have to adapt the code in those posts to my situation, to a greater or lesser extent. It was a great way to learn new methods, because you had to understand them to use them effectively; you could rarely just copy and paste. However, it wasn't particularly fast.

I can't remember the last time I looked anything up that way. Instead, I either use a system like Cascade in Windsurf proactively to generate code or fix something, or I use a copilot in my IDE's code editor reactively. I'm sure many people are in the same position, and this shows in Stack Overflow's sharp recent decline. You could already see it beginning to decline markedly after Covid, though. I wonder how much of that initial decline was due to saturation. On Stack Overflow, new questions were usually just pointed to existing answers to older questions. That alone would eventually reduce the number of new questions, but the decline clearly accelerated in the post-GPT-3 era.

In my mind, Stack Overflow lives on in the solutions proposed by LLMs that were pre-trained on its content, and in this way it kind of lives forever 🫡. Its content is great starting fodder for training LLMs to generate code and solve coding problems. However, it wasn't designed for the flow states¹ that happen in an IDE. Often you will have broken code and want a fix based on compiler output or an error in the terminal. Or you will have incomplete code you want to finish, or an existing project you want to extend.

Stack Overflow examples were intentionally as simple as possible, to show someone how to do something very specific. Today we want AI coding assistants to operate much more broadly and to build or fix many things in one shot. The best training material for this use case is now the successful interventions that happen inside Cursor and Windsurf. The millions of engineers using these IDEs have given them the perfect dataset to improve the experience. That data can also train their own models, specialised to the flow states that happen in these IDEs.

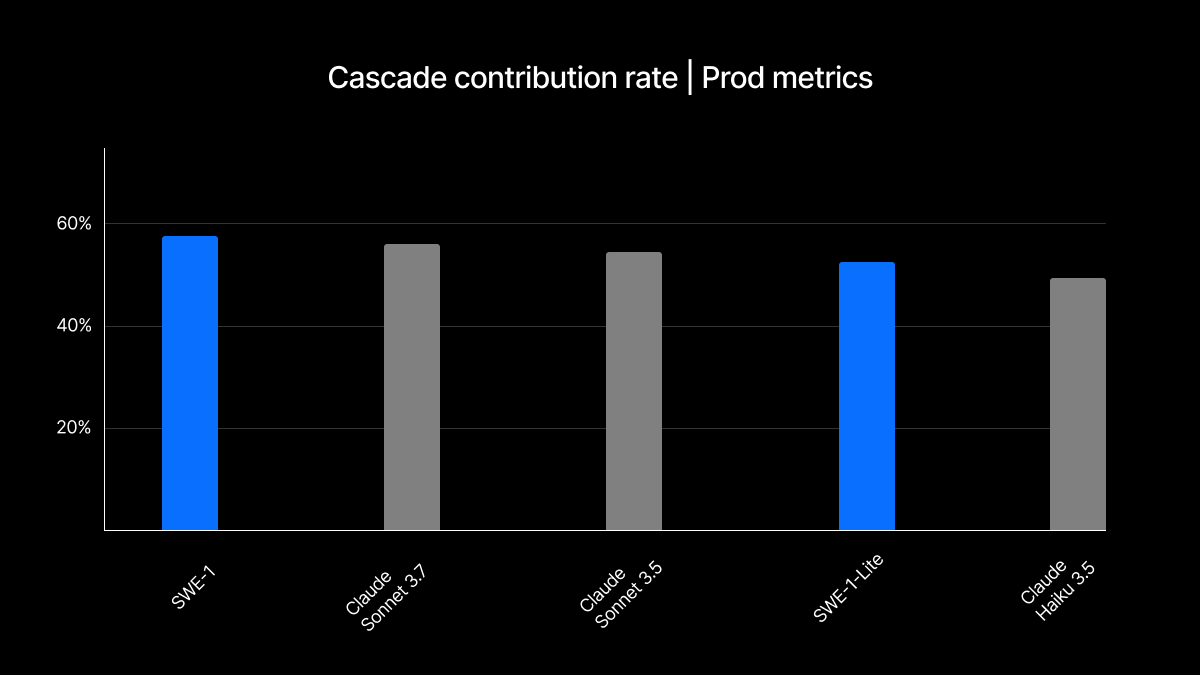

This blog post from the Windsurf team is well worth a read if you use IDEs like it or are otherwise interested. In my previous post, I predicted that an OpenAI model specialised to Windsurf's flow states would be incredibly significant. But Windsurf had been training its own models long before the OpenAI deal.

These models perform generally on par with the best frontier models we've seen and used for IDE flow states, such as Claude 3.5 Sonnet and newer. Given that, they look like a big part of the reason for the acquisition². I'm sure there will also be future collaborations with OpenAI on expertise and sheer GPU power that weren't possible before.

I was watching keenly to see Google's response to all of this at I/O this week. Gemini 2.5 Pro is already regarded as one of the best frontier models for use in AI-powered IDEs. There is a lot going on for developers in the abridged version of the keynote, but one announcement stood out most to me in relation to this post. Google unveiled Jules, which people have said is aimed at competing with tools like Claude Code, a CLI that works in the terminal.

There is a continuum in vibe coding right now. At one end are IDEs like Cursor, Windsurf and nao, which give you a lot of control over the code, with the usual Git flow etc. At the other end, AI builds a full application for you with something like Lovable or Google's own Firebase Studio, and you probably won't see the code. Jules appears to be a new step in the middle.

With Jules, it appears that you still see the code, but the environment is handled for you. Google mentioned that a container is spun up automatically to run your codebase, configured based on knowledge of that codebase. I think this is a really promising approach if it works as demoed, which is a very big if. I'll try it soon. One of the unsolved problems with using a big codebase in Cursor or Windsurf is getting your dev environment set up³. It's not uncommon for new engineers to spend anywhere from a couple of days to a week on this when they join a company. It's a significant blocker for anyone who wants to dip in and out and contribute occasionally.

I keep seeing memes and sassy LinkedIn posts about how these tools make mistakes and aren't valuable. I can't see this being a fad. I'm not going back to an IDE without AI assistance; it's just not going to happen, and millions of engineers around the world are voting with their wallets.

¹ If you read the Windsurf post on the release of SWE-1, you will understand why I'm using the term flow states. It refers to the handover between human and assistant in the IDE, where each responds to the other in different contexts.

² The release of these models, and how well they performed, would certainly have been known when the acquisition decision was made.

³ Cascade and similar assistants can help by reading your error messages and trying to install missing packages, but it's not a great experience in any of the IDEs right now. I still find myself needing to use pyenv or uv to manage environments, and it's a distraction that adds no value.

On the death of Stack Overflow, my question is: where will "new information" come from? There are always going to be newer and more efficient ways of doing things, and that knowledge needs to get indexed into the LLMs somehow. As long as Stack Overflow was active, it contributed to this alpha.

Training the models on Cursor/Windsurf questions and code could lead to some kind of staleness and stasis.

Have you tried Replit? https://replit.com/ It's also in that middle space. You can deploy on their infra with some built-in components, and you can go anywhere from vibe coding without ever looking at the code to using it more like Cursor, where you just ask for help from time to time.