Late last year, I wrote the precursor to this post, talking about how using a semantic layer with an LLM, to provide access to data for self-serve analytics, is far superior to text-to-SQL and is really the only way to enable something good enough for business.

We told you so

I’ve written about the semantic layer… a lot. I think only people who build and sell them write about them more. I’ve been saying for some time that, for true value in data to be had with LLMs, we need to use semantic layers with them. I am also just one voice of many:

At the time, I felt a bit like a prophet in the wilderness - not really heard. The data.world benchmark that we replicated at Delphi Labs was the first time that we could empirically prove how much better this method was.

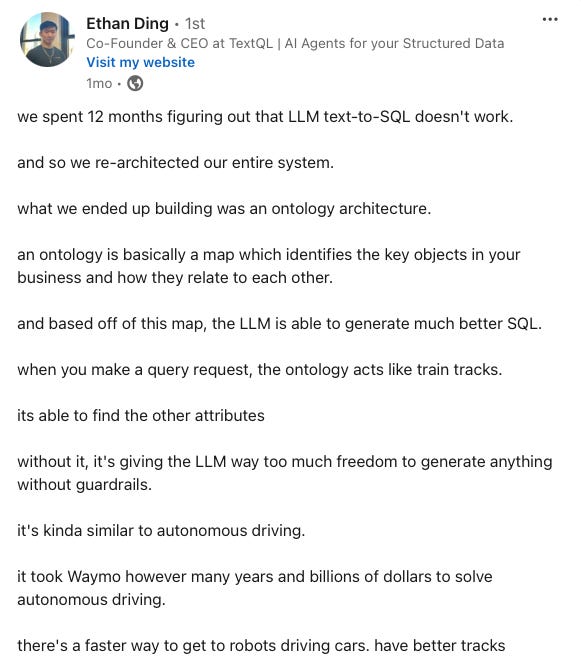

The best part of a year has passed and it seems like people are coming round to our way of thinking and that text-to-SQL is now dead:

Ethan’s post shows that even founders who tried to pursue this avenue have realised that this method will never work and have gone onwards to deploy their capital in a better way.

My theory is that, even if we could get it to work perfectly, it’s not transparent enough to be accepted:

A Darker Truth

It was about a year ago that I started the pre-seed fundraising process for Delphi. All of the VCs I spoke to asked me why we would succeed vs all the other entrants in the space. The vast majority of these entrants were using a method called “text-to-SQL”,…

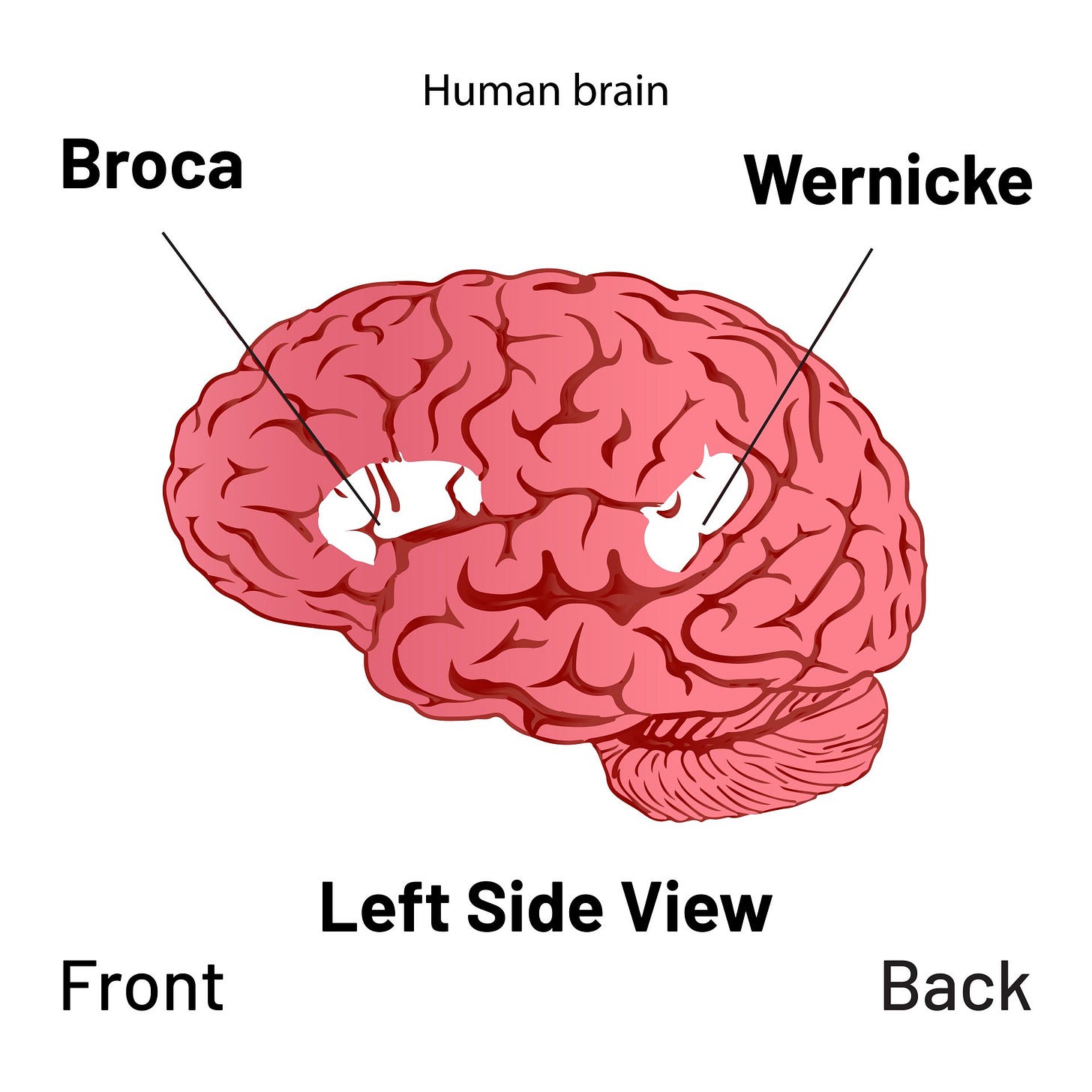

I often think about LLMs in the context of the human brain. The human brain actually has two small components (Broca, Wernicke) see below, that perform the function of today’s LLMs. What tech companies who produce open-source or proprietary LLMs are doing today in making bigger and better LLMs, is kind of like hoping that growing giant Broca and Wernicke components will compensate for the lack of the rest. I don’t think this is true - the other parts of the brain that handle simulation, memory, decision making, strategy, spatial, mathematics and many more things are fundamentally different.

The reason I could learn SQL in a few weeks when I was 22, with just a lowly 4m parameter brain where most of these parameters weren’t used for learning SQL1, is how much the rest of the parts of my brain had to do with it. Until we start making AI that is more than just Broca/Wernicke on steroids, I don’t think we’ll be able to make AI that can really plan, learn and strategise to the level a human can. It looks like OpenAI are also beginning to think this way.

The reason why people are talking about an AI winter again is because there have been a lot of toy products created. They’re fun and all, but if you make toys, people will play and not increase productivity. If you make tools and machines, then you can increase productivity, but it takes real engineering work to do this. Both on the vendor and client side. In the data world, that means doing the necessary data engineering work, which includes data modeling on the client side, and building a tool which constrains the LLM to do something specific and useful on the vendor side that is consistent, transparent and rarely hallucinates.

Knowledge graphs and context are now accepted as necessary

An LLM alone is like a super-smart analyst who is new to your data organization — they lack the semantic context about the nuances of your data. Given only the raw schema, it’s challenging for any analyst to write SQL accurately to answer data questions. This is because raw schemas are often messy and lack the semantic information needed for accurate data analysis.

Moreover, there’s typically a gap between the vocabulary used in business users’ questions and the database schema. Business users’ vocabulary aligns more with business terms, while the database schema vocabulary is closer to the ETL pipelines. This gap makes it difficult to build a product that answers data questions with high accuracy. In addition, data teams tend to be comprehensive — a raw table may include multiple versions of “sales” number, while you only want to present one version to your end business users.

With the excitement around LLMs, the BI industry started a new wave of incorporating AI assistants into BI tools to try and solve this problem. Unfortunately, while these offerings are promising in concept and make for impressive product demos, they tend to fail in the real world. When faced with the messy data, ambiguous language, and nuanced complexities of actual data analysis, these "bolt-on" AI experiences struggle to deliver useful and accurate answers.

The reality is that it's not enough to just point an LLM at a database schema and do text-to-SQL, because the schema itself is missing a lot of knowledge, like definitions of business processes and metrics, or how to handle messy data. The other approach is to capture this understanding in formal semantic models, but they require significant up-front investment, can't capture all the nuances, and are impractical to keep up-to-date as data and business processes evolve.

Snowflake and Databricks now both expect to take semantic context for AI on data to function. Unity Catalog can accept input from semantic layers, and it seems as though Databricks AI/BI is harvesting semantic information from your query history, to then feed into prompt engineering. Snowflake have released their own semantic YAML format.

However, both have shied away from making a deterministic compiler that takes a request in objects from the semantic layer and translates this into complex executable SQL. It’s not hard to see why they haven’t wanted to do it - these compilers are difficult=expensive to build. If you can get some lift without building them, then it’s a natural thing to try. GCP decided to buy rather than build.

Using semantic information only as context for RAG will help, to a point. As we saw previously with data.world’s benchmark - you get a big lift with a knowledge graph, but it only takes you to about 50% accuracy under ideal circumstances. This isn’t good enough for business.

The LLM must be forced to choose from terms that exist and not generate SQL. The LLM should generate something as close to natural language as possible for a request - after all, that is what they have been trained to do.

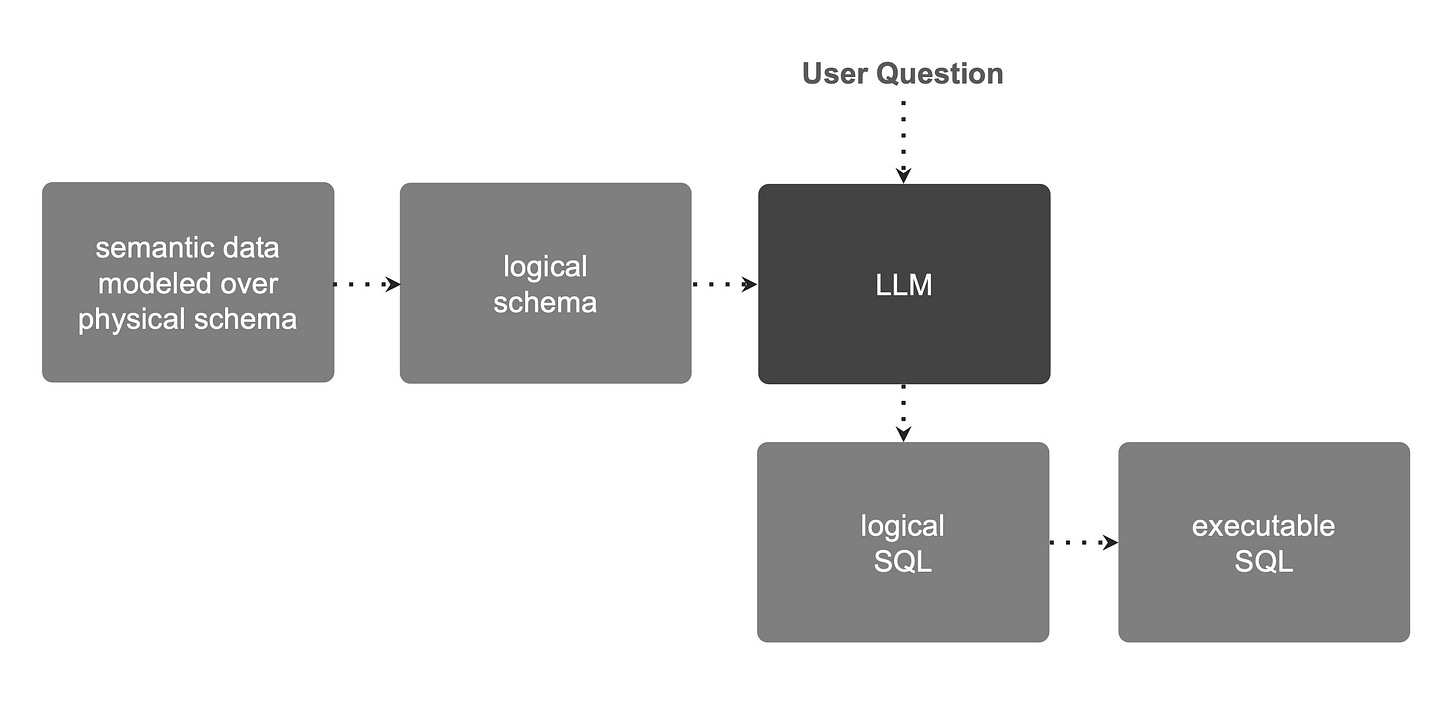

Human question (sounds simple right?).

LLM with RAG generates a simple request using terms from the semantic layer, telling the human what it is doing in these terms, which are understandable to the human.

The simple request is deterministically compiled to generate complex, executable SQL to run on the data warehouse (there are no simple data questions!).

There is just no way that you could reliably and consistently jump from step 1 to step 3, even if people are suggesting they can. This is even with a “simple” query on a small, clean data model.

They were mostly occupied with other stuff, like homeostasis