Dabbling with Dagster - Part 1

Dissecting the Octopus

I recently wrote a series about Airflow, having never touched it before and never having really used a full data stack orchestrator.

I say ‘full data stack orchestrator’ because I have used dbt Cloud to schedule dbt jobs for a few years. From an orchestration point of view, dbt Cloud is an almost no-code platform with only dbt CLI commands in jobs (ignoring the dbt transformation code itself).

There are two new orchestrators on the scene that purport to be the next generation of orchestration tools, and thus superior to Airflow: Prefect and Dagster. Both are open-source with cloud-hosted versions. In this series, I will take a closer look at Dagster. If I have time in the future, I may well also take a look at Prefect.

Why Dagster?

Several things are drawing me to Dagster right now:

The difficulties I had in using Airflow with MWAA, which I documented fairly thoroughly in my series on it.

Sandy Ryza’s excellent post on Software-Defined Assets, which is one of the first posts on this way of thinking about data assets - focusing on delivering the data asset wanted, rather than ordering and managing tasks in sequence. There’s more on his post below and I have also written about it, as have others. However, this is the first time I saw it practically applied. I have also since seen Fivetran take a similar approach.

Dagster’s recent launch of Dagster Cloud - as a data team of one, I would much rather use an affordable cloud service than self-host software. It just makes sense in terms of getting things done quickly and not having to look after too many things at the same time.

As far as I know, Dagster is the only full data stack orchestrator available that lets you look at individual dbt models as data assets within a larger job. I need to learn more about this functionality, but I’m excited to see what is possible. Fivetran’s implementation also allows for this, but its scope is limited to their own platform. Dagster allows for much greater flexibility.

Here’s a key quote from Sandy’s post:

In DevOps, we care about servers. In frontend engineering, we care about UI components. In data, we care about data assets. A data asset is typically a database table, a machine learning model, or a report —a persistent object that captures some understanding of the world. Creating and maintaining data assets is the reason we go to all the trouble of building data pipelines. Assets are the interfaces between different teams. They’re the objects that we inspect when we want to debug problems. Ultimately, they're the products that we as data practitioners build for the rest of the world.

The world of data needs a new spanning abstraction: the software-defined asset, which is a declaration, in code, of an asset that should exist. Defining assets in software enables a new way of managing data that makes it easier to trust, easier to organize, and easier to change. The seat of the asset definition is the orchestrator: the system you use to manage change should have assets as a primary abstraction, and is in the best position to act as their source of truth.

Apart from data contracts (which I’m sure I’ll delve into in another post), I feel this new paradigm of focusing on the data asset we want delivered, with SLAs around it, is one of the most important shifts in thinking we’ve had in some time. It’s also a real challenge - a lot of our systems (Airflow included) don’t allow you to operate in this way.
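To make the idea concrete for myself, here is a toy sketch in plain Python. It is not Dagster's API: the `asset` decorator, the `ASSETS` registry, the `materialize` helper and the two example assets are all hypothetical names I have made up for illustration. The point is only that you declare which assets should exist and what each one depends on, and the ordering of the work falls out of those declarations.

```python
import inspect

# Hypothetical registry (not part of any library): asset name -> building function.
ASSETS = {}


def asset(fn):
    """Register fn as the definition of an asset named after the function."""
    ASSETS[fn.__name__] = fn
    return fn


@asset
def raw_orders():
    # Stand-in for a source table; the rows are made-up illustration data.
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 35.5}]


@asset
def order_totals(raw_orders):
    # Declares its dependency on raw_orders simply by naming it as a parameter.
    return sum(row["amount"] for row in raw_orders)


def materialize(name, cache=None):
    """Build the named asset, building any upstream assets it needs first."""
    cache = {} if cache is None else cache
    if name not in cache:
        fn = ASSETS[name]
        upstream = inspect.signature(fn).parameters
        cache[name] = fn(**{dep: materialize(dep, cache) for dep in upstream})
    return cache[name]


if __name__ == "__main__":
    print(materialize("order_totals"))
```

Nowhere in this sketch do I say "run `raw_orders`, then run `order_totals`". I only ask for the asset I want, and the upstream work is inferred from the definitions.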

Access

Dagster takes a PLG approach, which I appreciate:

You don’t need to speak to anyone to try the platform

The onboarding process was largely smooth and clear (save some strange GitHub lag)

You get a 30-day free trial on either Cloud product

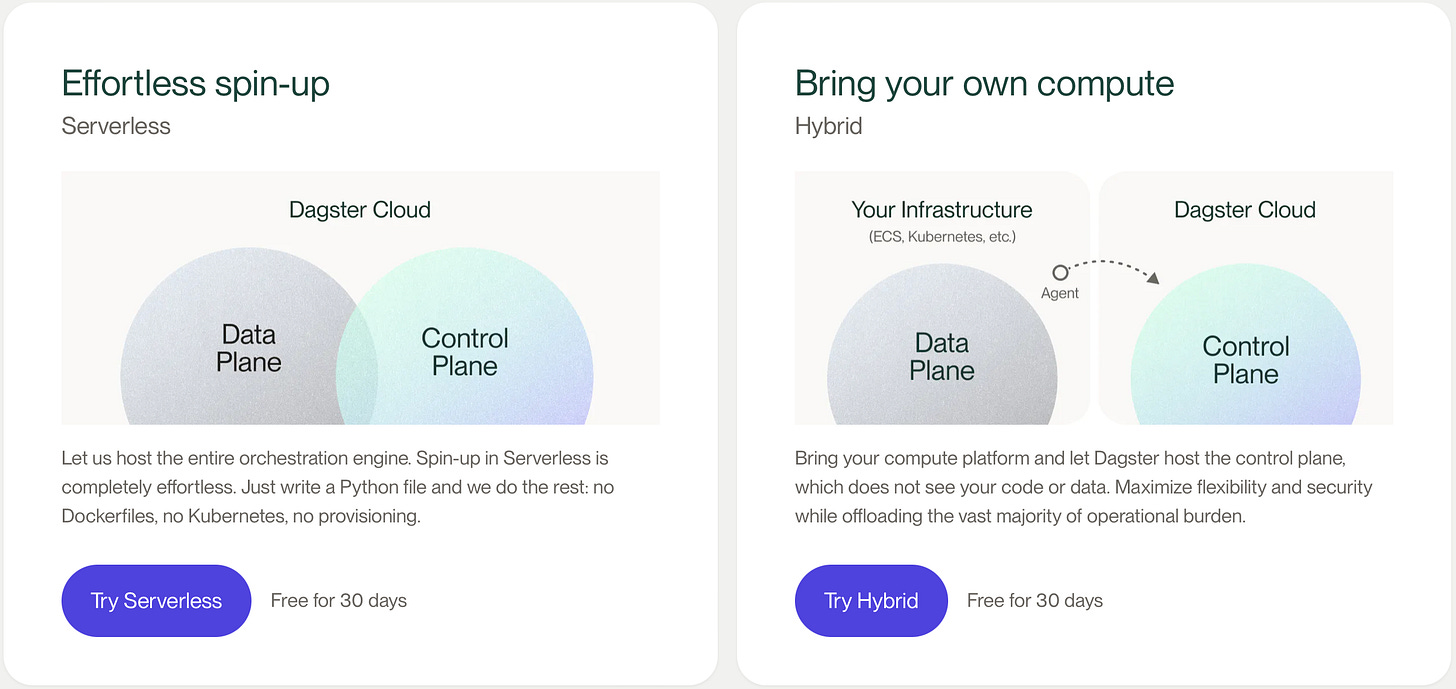

Dagster Cloud has two versions: Serverless and Hybrid. Hybrid lets you run your operations on your own infrastructure, leaving only the control plane in Dagster Cloud infrastructure. Hybrid mainly benefits teams whose security constraints preclude giving any other party access to their data. This will be really useful for data teams and consultancies who work with healthcare, PII and PCI data.

For my use cases and security needs, the Serverless option is absolutely fine. It is slightly more expensive than Hybrid, but I’m pretty sure that I wouldn’t make much (if any) saving by using our AWS account to host, especially once you add in the extra configuration time. The fact that it’s priced per compute minute is excellent compared to what I faced with MWAA. MWAA would run for about 10 mins a day to run my Fivetran connectors and dbt Cloud job, but I had to pay for an instance for the whole day. Even on the smallest instance, which I was on, that costs about $12 per day. That’s compared to the 40 cents I will most likely pay with Dagster Cloud Serverless (assuming that the run time will be similar).
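As a rough sanity check on that comparison, here is the arithmetic as a small Python sketch. The only inputs are the figures above; the per-minute rate is an assumption back-calculated from my 40 cent estimate, not a quoted price, and the variable and function names are just for illustration.

```python
# Figures taken from the paragraph above.
MWAA_COST_PER_DAY = 12.00        # smallest MWAA instance, paid for the whole day
RUN_MINUTES_PER_DAY = 10         # approximate daily run time
SERVERLESS_COST_PER_DAY = 0.40   # my estimate for Dagster Cloud Serverless

# Assumption: per-minute rate implied by the two estimates above,
# not an official price.
implied_rate_per_minute = SERVERLESS_COST_PER_DAY / RUN_MINUTES_PER_DAY


def daily_serverless_cost(minutes, rate=implied_rate_per_minute):
    """Cost of a day's runs when billed per compute minute."""
    return minutes * rate


daily_saving = MWAA_COST_PER_DAY - daily_serverless_cost(RUN_MINUTES_PER_DAY)

# Daily compute minutes at which per-minute billing would cost the same
# as the always-on instance.
break_even_minutes = MWAA_COST_PER_DAY / implied_rate_per_minute

if __name__ == "__main__":
    print(f"Implied rate: ${implied_rate_per_minute:.2f} per minute")
    print(f"Daily saving: ${daily_saving:.2f}")
    print(f"Break-even:   {break_even_minutes:.0f} minutes per day")
```

On these numbers, per-minute billing only catches up with the always-on instance at around 300 minutes (five hours) of compute per day, which is far beyond what my pipeline needs.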

The sign-up process was mostly pretty straightforward:

Choose Serverless or Hybrid (clicking ‘try Serverless’ on the previous page just routes you to a generic onboarding flow).

Going through the GitHub integration - this was the only part where I had a few short, slight snags. I needed a Metaplane GitHub org owner to approve installing the Dagster Cloud app. When they did it, they got a 401 but it still seemed to work soon after on my end. A really helpful thing that happens at this point is that you can set up a starter template repo in your GitHub from within the Dagster Cloud onboarding process. Again, there was the odd snag and lag where the repo got created and there was temporarily an error message on the onboarding flow, but within a few seconds it had resolved itself.

The third step is simply to try to kick off a run on the template DAG.

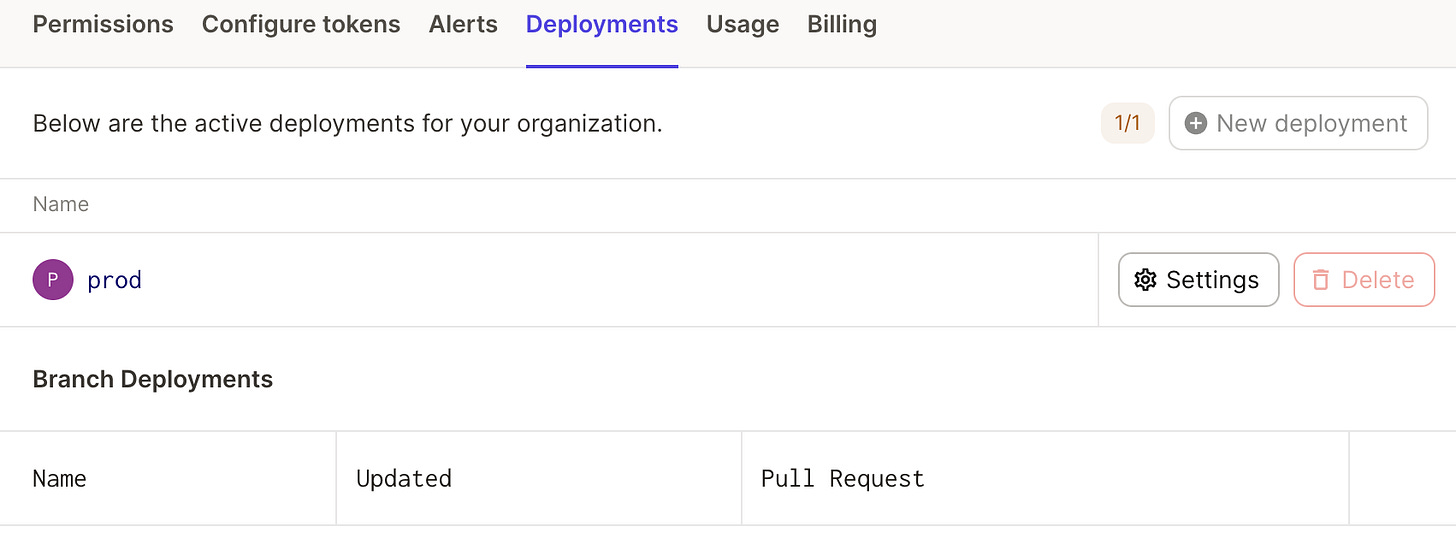

The fourth and final step of the onboarding flow is to make a branch deployment. I’ll go into more detail on this below.

So, in reality, the onboarding was finished within two steps. Steps three and four are simply there to briefly familiarise the user with the platform.

Branch Deployments

One of the major problems I had with MWAA was that I couldn’t easily create a development environment in which to make changes. It was possible, but I have previously described the complexity involved in the setup, which, to be honest, is beyond many data practitioners.

This is where Dagster Cloud really shines so far - it has the concept of deployments (which are like environments). You start off with one prod deployment. You need to upgrade to enterprise to get more, but this isn’t a problem for me or many other teams. Whenever a PR is made to your Dagster repo in GitHub, a new deployment for the branch is created automatically in Dagster!

So far so good

The thing that is so different with using Dagster Cloud vs Airflow, so far, is that I’m not wondering what to do:

I don’t have to choose which hosted version (beyond Serverless and Hybrid)

I don’t have to worry about infrastructure

I don’t have to find an example repo to start from

I don’t have to worry about how to get the instance to get code from the repo

I don’t have to worry about how to keep the code in sync with my development

I don’t have to think about how to set up different environments

I don’t have to worry about taking down my prod environment to refresh the code it’s running on

I know that the next thing for me to do is to start developing in my branch, so I can see if what I’ve made works on the branch deployment. This tight and automated flow of development with CI/CD is how I want to work.

I’m beginning to feel that Data Engineers have got so used to dealing with the baggage around Airflow that they can’t see it’s there any more.

Next

In the next posts in this series I will first try to recreate the DAG I had in Airflow (it’s possible to import Airflow DAGs, but I will also simply try to make it again as it’s relatively simple). I will also attempt to make some dbt models into Software-Defined Assets and experience what this is like in operation, especially compared to how it works in Fivetran’s Transformations feature.

I’ll also evaluate whether my 40 cents per day cost estimation holds up.
