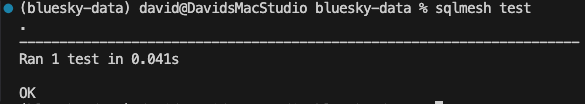

sqlmesh test

unit tests, generate external models and macros

I covered sqlmesh audits yesterday, and these resemble the original dbt tests, which are not unit tests from software engineering but are more similar to data tests. You assert expectations about your data, such as a certain column must be non-null, unique, and contain specific values. When the dbt test/sqlmesh audit is executed, it runs a simple query to verify that this assertion is true. The assertion is about the data, not the function. The data not meeting expectations could indicate an issue with the function, but it might not! 😩

Breaking and Non-Breaking Changes

Yesterday, I covered how sqlmesh virtual environments work and how they are a foundation for many of the benefits of using sqlmesh:

True unit tests in a data transformation tool should differ from sqlmesh audits and dbt tests. Unit tests demonstrate that a function performs as expected with specific fixed data (fixtures). In the context of tools like dbt and sqlmesh, unit tests could verify that a model, a portion of a model (such as one case when statement or calculation for a column), or a macro operates correctly with pre-defined fixtures. Therefore, if these “functions” change during development, the unit test can ensure they still function as intended without the complication of real-world data obscuring whether the result is correct. The assertion is about the function, not the data. If the test fails, the function is wrong… or the test needs to be changed to reflect the function’s new role.

I covered dbt’s unit tests before they were made GA early this year:

Unit Tests

In computer programming, unit testing is a software testing method by which individual units of source code—sets of one or more computer program modules together with associated control data, usage procedures, and operating procedures—are tested to determine whether they are fit for use.

They have since been released as part of dbt Core v1.8+. They are pretty much the same as when I wrote about it:

They require generating fixtures for each test in another YAML file. This generation is not currently automated, and creating these fixtures is non-trivial. So I can imagine that uptake isn’t as high as it should be.

They run on your data warehouse and need the parents of the model tested to exist in the warehouse. They do have a CLI command to help you build empty versions of the parent models in the warehouse to save money. However, this is a strange thing to need to do. Unit testing everywhere else does not require anything except the fixtures as data and the function defined.

They still do not support testing macros; however, I imagine that if the macro is used in the model, it can be tested this way. However, this method is indirect and will make debugging significantly harder.

Overall, a worthy addition to dbt Core that people have been requesting since the original data tests were released.

So how do they work in sqlmesh? As you might expect, they operate in a quite similar manner, but there are a few excellent features that enhance the quality of life for analytics engineers using them. They also do not support macros at this time.

Firstly, they can be generated automatically using the create_test CLI command, which I will demonstrate on one of the Bluesky models. You need to add some basic queries to pull the columns you require from the parent models into the test fixtures when executing the command:

sqlmesh create_test raw_http_sqlmesh.dim_profiles --query raw_http_sqlmesh.incremental_followers "select * from raw_http_sqlmesh.incremental_followers limit 3" --query raw_http_sqlmesh.incremental_follows "select * from raw_http_sqlmesh.incremental_follows limit 3"

By default, it generates the fixtures file in the tests folder, but you can specify this along with a few other details as options for the command.

You can also run sqlmesh tests on a different query engine, notably a local one like DuckDB. This means that if unit tests fail during development or in a production run, the warehouse may never start up. Most cloud data warehouses incur costs as soon as they are initiated, even if they don't perform any tasks. Running locally is also nearly instantaneous, which helps maintain that developer zen flow.

sqlmesh create_external_models

To document source tables not managed by dbt, sources must be specified in yet another YAML file. They need to be entered manually, although third-party utils packages can automatically generate sources for models.

In sqlmesh, it’s as simple as running the CLI command sqlmesh create_external_models. This command generates YAML, but I can imagine never having to edit these external model YAML files manually. I would simply run the create_external_models command when I build models that reference new external sources and perhaps even incorporate it into the CD process to ensure that all external models used in the project exist.

Macros

sqlmesh supports both Jinja macros that work very similarly to dbt macros but also have their own style that don’t require the Jinja start and end lines to use and are therefore much nicer. You don’t need to worry about what they will have done to the structure of the SQL because of pushing things onto separate lines and generated spaces. Just use them like you would another SQL object.

You can also have Python-defined macros:

There are also many useful out-of-the-box macros that address a significant portion of why individuals import dbt_utils into dbt projects.